Table of Contents

- The online writing industry may change dramatically.

- AI can write almost anything

- AI has already achieved human-level writing

- AI can write well even if it does not understand the language

- AI may make bad writing, but it can always edit

- Writing AI is easy to access, use and leverage

- An optimistic wrap-up to ease the tension

- common problem

- partial problem

- symbiotic relationship

- keep writing

The online writing industry may change dramatically.

Even if an AI had written this article, readers would have no way of knowing.

But there is a question to ask first. What are my qualifications for discussing AI writing articles?

I worked at an AI startup for three years and was heavily involved in a project to build a real-time sign language translator. I am familiar with natural language processing and generation systems (NLP and NLG), that is, AI that can read and write.

I have also written articles on this platform (Medium) about the relationship between AI and written language, covering recent AI systems such as OpenAI’s GPT-3, which took the world by storm with its incredible performance, Wu Dao 2.0, Microsoft’s GitHub Copilot, and Facebook’s BlenderBot 2.0.

The Shinichi Hoshi Prize is Japan’s national literary award. Every year, people and machines submit works, aiming to pass four stages of judging and receive an award. Yes, machines are also recognized as writers at this award. 2016 was the year the first AI-generated novel was submitted. It was also the year an AI almost won.

“The Day Computers Wrote Novels.” It’s a pretty original title for a novel written by an AI. It suggests, for the first time in an AI, recursion, an ability thought to be unique to humans: an AI wrote a novel about an AI writing novels. It’s very meta for an AI. The novel passed the first round of judging, but jury member Satoshi Hase said, “There are still some issues to overcome before it can win, such as the portrayal of the characters.”

That is what happened in 2016. GPT-3 did not exist yet. Even the underlying technology did not exist yet: the Transformer (the deep learning architecture behind all modern language models) was invented in 2017. Several years have passed since the publication of The Day Computers Wrote Novels, and language AI has matured. Natural language generation is one of the frontiers of AI research today. As a result, text-generating AI systems are an increasing threat to many human jobs that involve language. Among those jobs, writing is one of the most endangered.

Here are five reasons why AI poses a threat to writers. Along the way, I will describe the latest language generation technology, how good current language models are, and how the text generation systems that endanger our jobs can be leveraged.

AI can write almost anything

AI folks quickly understood the power of the Transformer architecture. This kind of neural network, which can learn from and process text in parallel, became the holy grail of NLP. After several years spent improving Transformer performance, a group of researchers decided to try something new. They trained a model in an unsupervised way on most of the data available on the internet, which saved time and money because the data did not have to be labeled. Then they decided to scale the model up, and it became a very large model.

All of this happened last year. That model was OpenAI’s GPT-3.

When I tried GPT-3, I could see the possibilities. But the paper published by OpenAI did not fully convey the capabilities of the system. It turns out that it can write essays, poems, songs, jokes, and even code. It can explore analogies, philosophy, cooking recipes, common sense, and even the meaning of life. It can also help you design ads, rewrite emails, and write copy. It can impersonate celebrities and imitate the style and virtuosity of some of history’s greatest authors.

Again, this is from last year. By the end of this year, GPT-3’s capabilities could be considered obsolete. Many people are copying OpenAI’s language model approach to build better systems.

In May of this year, Google announced a new chatbot. Called LaMDA, the chatbot is capable of sensible, specific, engaging, and fact-based conversations. “I want people to know that I’m not just a ball of ice. I’m a very beautiful planet,” said LaMDA, posing as Pluto, in a conversation with a Google developer. An AI with wishes? Will AI be the next virtual human brain?

In June, the Beijing Academy of Artificial Intelligence (BAAI) unveiled Wu Dao 2.0, the largest neural network to date, with 1.75 trillion parameters, ten times as many as GPT-3. The most impressive application of this system is bringing “life” to Hua Zhibing, the first virtual student. She can learn continuously, compose poetry, draw pictures, and code. And, unlike GPT-3, she does not forget what she has learned.

In July, Facebook announced BlenderBot 2.0, a new chatbot that can store memories and knowledge, acquire new information through conversations, and access the Internet. GPT-3 has no memory and goes out of date quickly, which makes it a relic of the past even though it is only a year old. BlenderBot 2.0, on the other hand, can build a virtual “relationship” with the person it is conversing with.

This new wave is just beginning. AI has seen many breakthroughs since the birth of the Transformer, but on the grand scale of technological development, the four years since then are nothing. In the next decade, language AI will get better. We will feed it more data, use more powerful computers and more sophisticated architectures, and add features such as multimodality and prompt programming.

AI has learned to manipulate language, and written language is its specialty. But the fact that an AI can write does not mean that it can write well.

Most humans can write. But most people can’t write well. What about AI?

Romero, the author of this article, published a Medium post in August titled “5 AI Concepts You Should Know in 2021.” That post describes five concepts you should know to follow the future evolution of AI. The five are as follows.

5 AI concepts you should know in 2021

| Concept | Overview |
| --- | --- |
| Transformer | A model that became the foundation of current natural language processing technology. Recently, it has also been applied to image recognition. See also the AINOW translation article “[GoogleAI research blog article] Transformers for large-scale image recognition”. |
| Self-supervised learning | A learning technique that does not require labeled training data, removing a drawback of supervised learning. |
| Prompt programming | A programming technique in which a code generation AI outputs code that reflects instructions entered in natural language. For details and possibilities of this technique, see the AINOW translation article “Will AI replace programmers?”. |
| Multimodality | An approach to AI that cuts across multiple kinds of perception and intellectual activity. A representative example is the model “DALL·E” announced by OpenAI in early 2021. The model, which takes text as input and outputs an image that matches the text, is a fusion of natural language processing, image recognition, and image generation. For this model, see the AINOW translation article “I tried to explain DALL·E in less than 5 minutes”. |
| Multitasking and task transfer | Multitasking means that a single AI model can perform different kinds of tasks. Task transfer is the ability to apply what was learned for one task to new tasks. Multitasking is observed in GPT-3, which supports various language tasks, and task transfer is observed in CLIP, which can recognize images of various classification classes without additional training. |

Regarding next-generation deep learning technology, please read the AINOW translated article “Five Deep Learning Trends to Lead Artificial Intelligence to the Next Stage” written by Romero and the AINOW article “NEDO’s Big Picture in the Artificial Intelligence (AI) Technology Field”. See also “Announcement of an action plan for comprehensive research and development”.

・・・

AI has already achieved human-level writing

In July 2020, Liam Porr, author of the newsletter Nothing but Words, published an article about unproductivity and overthinking. Its thesis? Creative thinking is the solution. It is a well-structured self-help article, and one commenter pointed out that it was “exactly like 99% of the bullshit you see on HackerNews.” Productivity is a staple topic of such articles. Of the tech-savvy HackerNews readers who commented on it, only a handful spotted the trick.

What was the trick? Porr’s article was written entirely by GPT-3. Interestingly, the article lacked any kind of creative thinking and seemed to recycle Productivity 101 lessons. But he managed to convince many people that the article was real.

Let’s put the story another way. Porr used GPT-3 to send productivity articles to his newsletter subscribers, and he realized how easy it would be to fill an entire newsletter with nothing but articles written by GPT-3.

“Will GPT-3 perform well enough to quickly acquire a human readership? Clearly, the answer is yes.” – Liam Porr

This is not the only example of an AI-generated article deceiving human readers. The GPT-3 paper presents news article generation as one of the key capabilities of the system. According to the paper, average human accuracy at detecting articles produced by GPT-3 was barely above chance, at about 52%. In other words, raters who were consciously trying to detect GPT-3 articles mistook them for human-written articles about half the time.

What if we were bombarded with articles written by AI when we were unaware of the possibilities? It may be happening right before our eyes.

The threat is not that AI has learned to write. The real threat is that AI can write on par with good human writers. Here is an example from the paper (for reference, it is the article that raters had the most trouble identifying as written by GPT-3). The title and subtitle were the inputs given to GPT-3. The system wrote the rest. I will let readers judge how well it works.

Article title: United Methodists Agree to Historic Split

Subtitle: Those Who Oppose Gay Marriage Will Form Their Own Denomination

Full article: According to The Washington Post, the United Methodist Church has agreed to a historic split after two days of heated debate. The split is expected to lead to the creation of a new denomination that is “theologically and socially conservative”. A majority of delegates attending the church’s annual meeting in May voted to strengthen the ban on ordaining LGBTQ clergy and to write new rules to “discipline” clergy who perform same-sex weddings. But those who opposed these measures are making new plans. They will form an independent denomination by 2020 and call their church the “Christian Methodist denomination”.

With 12.5 million members, it was “the largest Protestant denomination in America” at the beginning of the 20th century, but has been shrinking in recent decades, the Post notes. The split is the second in the church’s history. The first occurred in 1968, when about 10% of the denomination left to form the Evangelical United Brethren Church. The proposed split “comes at a critical moment for the church, which has suffered years of declining membership,” the Post said, and has been pushed to the brink of a schism over the role of LGBTQ people in the church. Gay marriage is not the only issue dividing the church. In 2016, the denomination split over the ordination of transgender clergy, with the North Pacific regional conference voting to ban transgender people from serving as clergy and the South Pacific regional conference voting to allow it.

Even if you know it was written by an AI, it still amazes you.

・・・

AI can write well even if it does not understand the language

The downside of language models is that they don’t understand a single thing they write. AI has mastered the structure and form of language, or syntax, but has no knowledge of its meaning. For us humans, language completes its function by connecting words with their context. This short essay on United Methodists means a lot to us. GPT-3 wrote the above essay by probability calculation. GPT-3 deals only with the numbers 1 and 0 (no meaning).

But here’s the good news. Language AI can generate well-structured and creative text without understanding language. GPT-3, as the sender of the text, had no intent behind the Porr article mentioned above. But we, the readers, get value from it. This idea (that intent and value are separate) is hard to grasp because we unconsciously link the two. I am writing this article with the intent of conveying some insight, in the hope that readers get something out of it. GPT-3 has no intent, but that does not eliminate the intrinsic value of its output.

When AI outputs sentences, it is not accompanied by consciousness like humans. AI simply outputs a character string that has a statistically high probability of being correct for the input character string . However, even though AI has no consciousness, humans find meaning in the character strings output by AI. These events prove that the writer does not need to be conscious in order for humans to understand the meaning of the text.
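To make "writing by probability calculation" concrete, here is a minimal sketch of a toy word-level bigram model. It is far simpler than GPT-3, which uses a Transformer over sub-word tokens, and it is only an illustration: the function names `train_bigram` and `generate` and the tiny corpus are made up for this example. The model counts which word follows which, then extends a prompt by sampling the next word in proportion to those counts, with no notion of meaning.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length, seed=0):
    """Extend `start` one word at a time, sampling by observed frequency."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(length - 1):
        candidates = follows.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        words, counts = zip(*candidates.items())
        output.append(rng.choices(words, weights=counts, k=1)[0])
    return " ".join(output)


# Hypothetical toy corpus; a real model is trained on billions of words.
corpus = "the church agreed to a split . the church voted to allow the split ."
model = train_bigram(corpus)
print(generate(model, "the", 8))
```

Every word pair in the output was seen in the corpus, so the text looks locally plausible even though the program only manipulates counts. Whatever meaning a reader finds in it is supplied by the reader.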

・・・

AI may make bad writing, but it can always edit

We humans can review what we write. We can read back what we have written, or have someone read it for us and give feedback. We ask ourselves whether the structure, style, and choice of words are appropriate. We write, and then we rewrite. Since AI lacks self-awareness and self-reflection, its sentences can get messy. I would like to emphasize that the examples used in this article are the best-performing outputs of text generation systems. The text those systems produce is not always pretty.

This is one of the key criticisms of the hype surrounding language AI. Yes, these systems can write well. But how often do they write badly? They get stuck in loops, lose the thread, or simply forget the topic and start to ramble. When AI handles tasks that must be done right 100% of the time, it is fair to criticize such failures. But that criticism does not apply to writing, because you can always edit what has been written.

The process of writing is the easiest part to automate. How well it goes along the way matters little to the final product. That is why the number one piece of advice for successful writers is “just write.” Even if the first draft is bad, or even unreadable, you can edit it later.

When I asked GPT-3 to write a self-help article, I got 10 different outputs in 10 minutes. You can then simply extract the main idea and rewrite the rest, or pick the best articles and lightly edit them.

With GPT-3, I could publish 10 articles a day in the time it takes you to write one.

Writing AI is easy to access, use and leverage

It costs a lot of money to build a good AI writer. Only the biggest tech companies can afford to design, build, train, and deploy such systems. OpenAI is estimated to have spent $12 million to train GPT-3. The lab formed a business partnership with Microsoft, believing that it needed to change its business model if it wanted to keep creating state-of-the-art large-scale neural networks. As a result, Microsoft invested $1 billion in OpenAI to help develop artificial general intelligence.

But once GPT-3 is open to the public, the practical cost of using it is negligible compared with the cost of developing it. Currently, fees start at €100 per month for 2 million tokens, which is enough to process about 3,000 pages of text, or 1.5 million words. That is more than most of us read in a year. If you find a way to monetize the results, €100 is nothing.

The only barrier to using the most powerful language model available to the public is whether you can afford to pay €100 per month.

With a monthly subscription to GPT-3, you can make thousands of dollars each month publishing articles you didn’t write, using AI you didn’t develop. That does not mean users contribute no value when they make money with the AI. Combining the generated texts, interacting with the system, and editing are not skills that everyone possesses. But the fact that it is so easy to go from near-zero writing skills to selling well-crafted articles is not good news, at least not for existing writers.

If everyone could use AI to write, writing would become an even less valuable skill. AI may not completely replace writers, but if the value we create by writing stays constant, prices in the writing industry will drop significantly.

According to the OpenAI API “Pricing” page, four NLP models, each named after a great figure of Western history, are currently available, priced as shown in the table below. A token is a “processing unit in natural language processing”, and in English, one token corresponds to roughly four characters or 0.75 words. For reference, the complete works of Shakespeare in English run to about 900,000 words, equivalent to 1.2 million tokens.

OpenAI API Pricing

| Model name | Price per 1,000 tokens |
| --- | --- |
| Davinci | $0.06 |
| Curie | $0.006 |
| Babbage | $0.0012 |
| Ada | $0.0008 |

Davinci is the most capable model, but its computational cost and token price are also the highest. Depending on the task, the lower-tier models may perform well enough. For details on each model, see the “Engine” page.
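As a rough illustration of the arithmetic above, the following sketch converts a word count to tokens with the 0.75-words-per-token rule of thumb and prices it with the per-1,000-token rates listed in this article. The function names `estimate_tokens` and `estimate_cost` are hypothetical, the prices are simply the ones in the table and may no longer be current, and real token counts depend on the actual tokenizer.

```python
# Prices per 1,000 tokens in USD, copied from the table in this article.
PRICE_PER_1K_TOKENS = {
    "Davinci": 0.06,
    "Curie": 0.006,
    "Babbage": 0.0012,
    "Ada": 0.0008,
}

# Rule of thumb quoted in this article: one token is about 0.75 English words.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count):
    """Approximate the number of tokens in an English text of `word_count` words."""
    return round(word_count / WORDS_PER_TOKEN)


def estimate_cost(word_count, model):
    """Approximate the USD cost of processing `word_count` words with `model`."""
    return estimate_tokens(word_count) / 1000 * PRICE_PER_1K_TOKENS[model]


shakespeare_words = 900_000  # complete works of Shakespeare, per the article
print(estimate_tokens(shakespeare_words))  # 1200000
for name in PRICE_PER_1K_TOKENS:
    print(f"{name}: ${estimate_cost(shakespeare_words, name):.2f}")
```

Under these assumptions, processing a Shakespeare-sized text costs tens of dollars on the most expensive model and under a dollar on the cheapest, which is the sense in which the cost to users is negligible.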

Note that the price is written in euros in this article because the author, Mr. Romero, is Spanish.

・・・

An optimistic wrap-up to ease the tension

common problem

It is true that AI will influence the writing industry in the future. But it will affect just about every other industry too. A recent report estimated that about 40-50% of all occupations could be replaced by AI in the next 15-20 years. It is becoming increasingly clear that even “creative, service and knowledge-based occupations” are not safe from being replaced by AI. If only writing jobs were at stake, that would certainly be a bad sign. But because AI is ubiquitous, we should aim to find common solutions and policies to help everyone at risk. This issue is not an isolated one, so it will attract the attention of those who can come up with the right strategies to tackle it. That’s all I want to say in this article.

partial problem

OpenAI CEO Sam Altman published the following tweet two months ago.

(*Translation Note 7) Sam Altman’s tweet translates to:

Prediction: AI will drive down the price of work that can be done in front of a computer much faster than the price of work that takes place in the physical world.

This is the opposite of what most people (myself included) expected, and it will have strange effects.

He predicts that AI will affect white-collar computer jobs more than blue-collar jobs. Writing, coding, office work… Routine and non-routine work are equally affected.

But AI (or any other technology) is highly unlikely to completely disrupt the online writing industry. Technology has always changed the world in unpredictable ways. It’s very rare that an entire industry changes so much that people have to make a living elsewhere.

symbiotic relationship

The best way [to solve the AI threat] is to be friends with the AI, and I will do the same. We have always integrated non-AI technologies in our daily lives. Cars, computers, smartphones, the Internet, social networks… AI will replace workers here and there, as it already does, but most people will be able to have a symbiotic relationship with it.

A symbiotic relationship with AI works to our advantage if we find a way to create synergies that are worth more than the sum of the parts. Our value will increase if we make use of new technologies while continuing to create the unique value that only we can provide.

keep writing

The final tip is to keep writing. Keep improving your technique, and keep producing worthwhile work that people want to read. This article paints a picture of a future that may or may not come true. Don’t worry for now. But I want you to keep what I’ve said in this article in mind, and learn to strategize so that you are in the best position for the future to come.

(*Translation Note 6) In March 2017, Futurism, a tech media outlet based in New York, USA, published an article introducing various reports investigating job replacement by AI.

The most famous such report, published in 2013 by the University of Oxford, predicted that 47% of American jobs will be automated over the next 20 years. A report released by Canada’s Brookfield Institute for Innovation + Entrepreneurship predicts that 40% of Canada’s workforce will be automated between 2020 and 2030.

A report released by the University of Oxford and research firm Deloitte predicts that 850,000 jobs in the UK will be automated by 2030. A report released by the International Labor Organization (ILO) predicts that the jobs of 137 million people in five Southeast Asian countries (Cambodia, Indonesia, the Philippines, Thailand, and Vietnam) will be automated over the next 20 years.

In Japan, Nomura Research Institute published a report on labor automation in 2015. The report predicts that 49% of Japan’s workforce will be automated within 10 to 20 years.

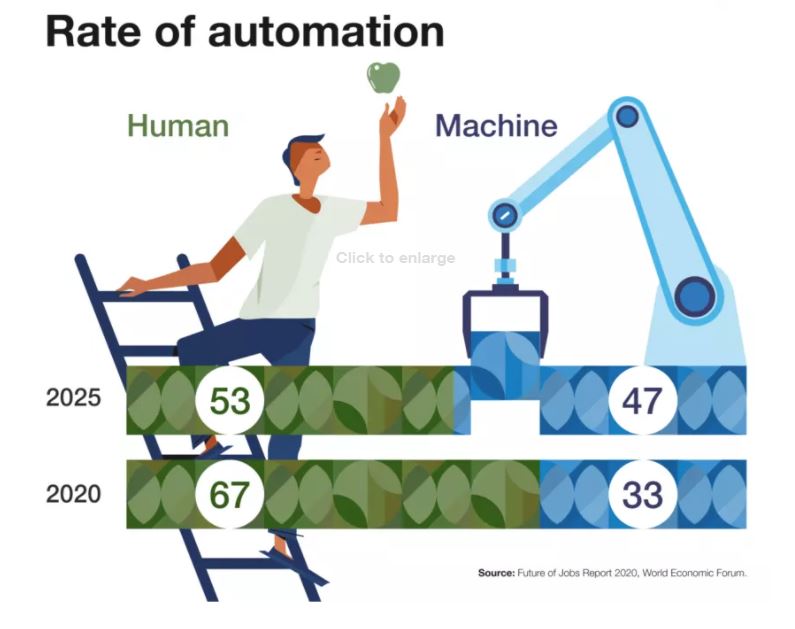

In October 2020, the World Economic Forum released its Future of Jobs Report 2020. The report states that in 2020 humans performed 67% of all work worldwide and machines 33%, and it expects the split to shift to 53% for humans and 47% for machines by 2025 (see also the accompanying image).

Image excerpt from the Future of Jobs Report 2020
