Table of Contents

- Preface

- Significance of multimodal and universal

- Running T-Bletchley

- Universal text-to-image search

- Code switch search

- Image-to-image search

- Understanding text in images

- T-Bletchley: model development

- Dataset

- Model architecture and training

- Detailed model evaluation

- English

- Universal

- The next deployment

Preface

Today, the Microsoft Turing team is proud to introduce Turing Bletchley, a universal image language representation model (T-UILR) with 250 million parameters that can perform image-language tasks in 94 languages. The model has an image encoder and a universal language encoder that vectorize input images and text, respectively, so that semantically similar images and text are aligned with each other. This model has uniquely powerful capabilities and represents a breakthrough in image-language understanding.

T-Bletchley outperforms state-of-the-art models such as Google’s ALIGN on English image-language datasets (ImageNet, CIFAR, COCO), and it outperforms MULE, SMALR, and M3P on universal image-language datasets (Multi30k, COCO). If you want to see T-Bletchley in action, try the demo.

(*Translation Note 1) Microsoft is running an initiative called “Project Turing” with the goal of enabling machines to read and write. In addition to Turing Bletchley, the model introduced in this article, the project announced MT-NLG, a language model with 530 billion parameters, in October 2021.

The project name “Turing” comes from Alan Turing, who is famous for the Turing test. The “Bletchley” in Turing Bletchley is thought to come from Bletchley Park, England. During World War II, Bletchley Park was home to the Government Code and Cypher School, where Alan Turing worked. It now houses the National Museum of Computing, where a reconstruction of the code-breaking machine Colossus is on display.

Significance of multimodal and universal

Image representing “Beautiful sunset on the beach”

Words and vision are intrinsically linked. When we hear the phrase “a beautiful sunset on the beach” we imagine images like the one above. A model that focuses solely on language fails to capture this connection. In such a model, sentences are nothing more than grammatically correct sequences of words.

Furthermore, vision is a global modality. No matter which language is used to describe a beach sunset (“una hermosa puesta de sol en la playa” in Spanish, “un beau coucher de soleil sur la plage” in French, “Matahari terbenam yang indah di pantai” in Indonesian), the corresponding visual representation does not change. Traditional multimodal models tie vision to a specific language (most commonly English) and therefore fail to capture this universal property of vision.

T-Bletchley addresses both shortcomings. Through its multimodal approach, the model advances a computer’s ability to understand language and to understand images as they are, from pixels alone and without textual information. In addition, we took a universal-first approach to the language modality when developing the model. The result is a unique universal multimodal model that understands images and text in 94 different languages, and it performs well. For example, by using a common image-language vector space, and without extra information such as metadata or surrounding text, the model can retrieve images that match a text description provided in any language. It can also find images that answer text-based questions written in any language, or images that are semantically similar to another image.

Running T-Bletchley

To verify the ability of T-Bletchley, we built an image retrieval system over 30 million randomly sampled web images that the model had not seen during training. These images were encoded by the image encoder and stored in an index without any textual metadata such as captions or alt-text.

To demonstrate T-Bletchley’s capabilities, we built two types of search systems: text-to-image search and image-to-image search. The input query is vectorized using the text encoder for text-to-image search and the image encoder for image-to-image search. Using the encoded vector as a key, we query the index to find its nearest neighbors in the vector space using HNSW, an approximate nearest neighbor (ANN) algorithm. The nearest neighbor images obtained this way are then displayed as search results.
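The retrieval flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the team’s implementation: the three-dimensional vectors are hand-written stand-ins for encoder outputs, an exact brute-force scan stands in for HNSW, and the names `normalize`, `build_index`, and `search` are hypothetical.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def build_index(image_vectors):
    """Store unit-length image vectors keyed by image id (no text metadata)."""
    return {image_id: normalize(vec) for image_id, vec in image_vectors.items()}

def search(index, query_vector, k=2):
    """Return the k image ids with the highest cosine similarity to the query.

    Exact brute-force scan; a large index would use an ANN method such as HNSW.
    """
    q = normalize(query_vector)
    scored = [
        (sum(a * b for a, b in zip(q, vec)), image_id)
        for image_id, vec in index.items()
    ]
    scored.sort(reverse=True)
    return [image_id for _, image_id in scored[:k]]

# Toy stand-ins for image encoder outputs (assumed values).
index = build_index({
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "city_night.jpg": [0.1, 0.9, 0.2],
    "beach_noon.jpg": [0.7, 0.2, 0.3],
})

# Pretend this vector came from the text encoder (or the image encoder).
query = [1.0, 0.0, 0.1]
results = search(index, query, k=2)
print(results)
```

The same `search` function serves both system types, because only the encoder that produces the query vector differs between text-to-image and image-to-image search.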

Today’s image search systems rely heavily on the textual metadata associated with images, such as image captions, alt-text, surrounding text, and image URLs. A distinguishing feature of T-Bletchley is that it performs image retrieval using only the encoded image vectors, without any textual metadata. This is a big step towards true image understanding compared to current systems. Additionally, the demo was built using the pre-trained model as-is, without fine-tuning it for the image retrieval task.

Current image retrieval systems also use object tagging algorithms, which augment images with textual metadata (for example, by adding tags such as car, house, or waves). Since object tagging systems are trained on human-labeled data, the number of classes (tags) is very limited. Because T-Bletchley was trained on unsupervised data, it understands a great many objects, actions, and real-world concepts (dancing, programming, racing, and so on).

Below are examples demonstrating the power of T-Bletchley in an image retrieval system.

(*Translation Note 3) HNSW (Hierarchical Navigable Small World) is a nearest neighbor search algorithm announced in 2016. It achieves high-performance nearest neighbor search by using a hierarchical graph. A blog post published by the AI startup Pinecone illustrates HNSW graphically.

Universal text-to-image search

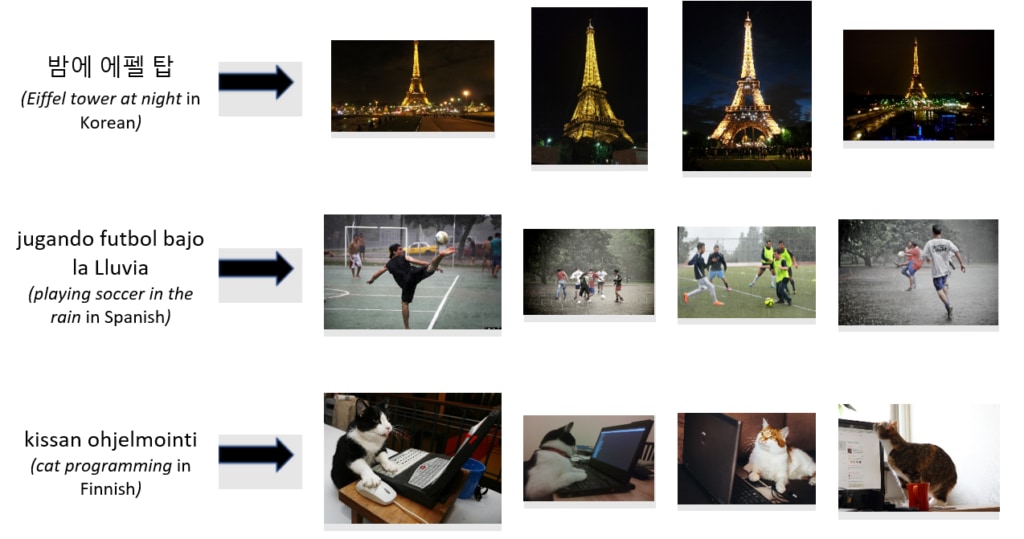

Here are some examples of images retrieved with text-based queries written in multiple languages.

The third example shows that T-Bletchley “understands” the act of programming and has formed a vector subspace dedicated to images of cats programming. True image understanding of this kind can be used to improve current search systems so that they focus more on the image itself.

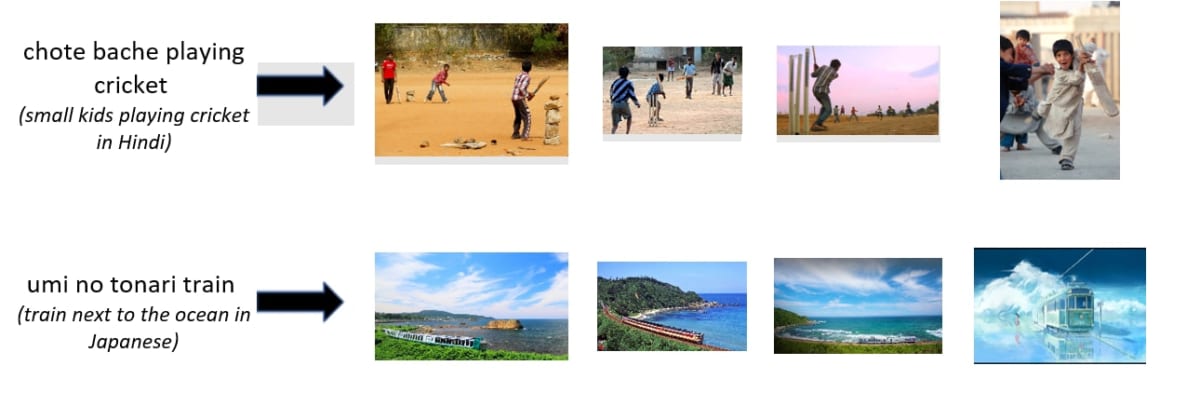

Code switch search

T-Bletchley can even retrieve images for queries in non-English languages that are written in the English script!

T-Bletchley can also understand text that mixes multiple languages and scripts.

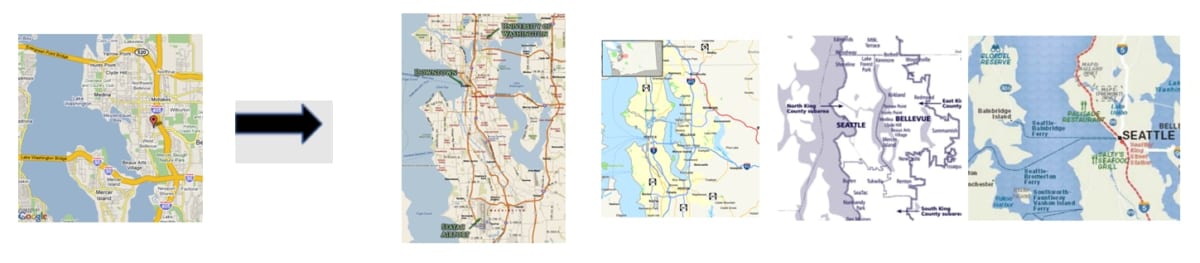

Image-to-image search

To evaluate image-to-image retrieval, we encoded a given image with the image encoder and searched the index for the nearest image vectors and their corresponding images. Because T-Bletchley is trained to choose the best caption for an image, it tends to prefer semantically similar images over visually similar ones.

(*Translation Note 4) The image search results above show maps of the Seattle area in the United States, with different map scales and display areas.

The images retrieved by T-Bletchley are not necessarily similar in appearance to the query image. However, images of the same geographic location are “semantically similar”. The model does not simply return the images in the index that look most like the input image.

Understanding text in images

T-Bletchley can understand text in images without using OCR technology. In the example below, the images are passed directly to the image encoder, stored as 1024-dimensional vectors, and only the cosine similarity between these vectors is used to search for similar images.

In the first example, T-Bletchley understands that the text in the image is about the difference between microeconomics and macroeconomics, and searches for similar slides. In the second example, the model is searching for images related to COVID-19, even though the training data is pre-COVID-19.

These in-image text recognition features are universal and can be used in multiple languages. In the example below, we are searching in French and Arabic.

T-Bletchley: model development

Dataset

T-Bletchley was trained using billions of image-caption pairs extracted from the web.

An example of this dataset is shown below.

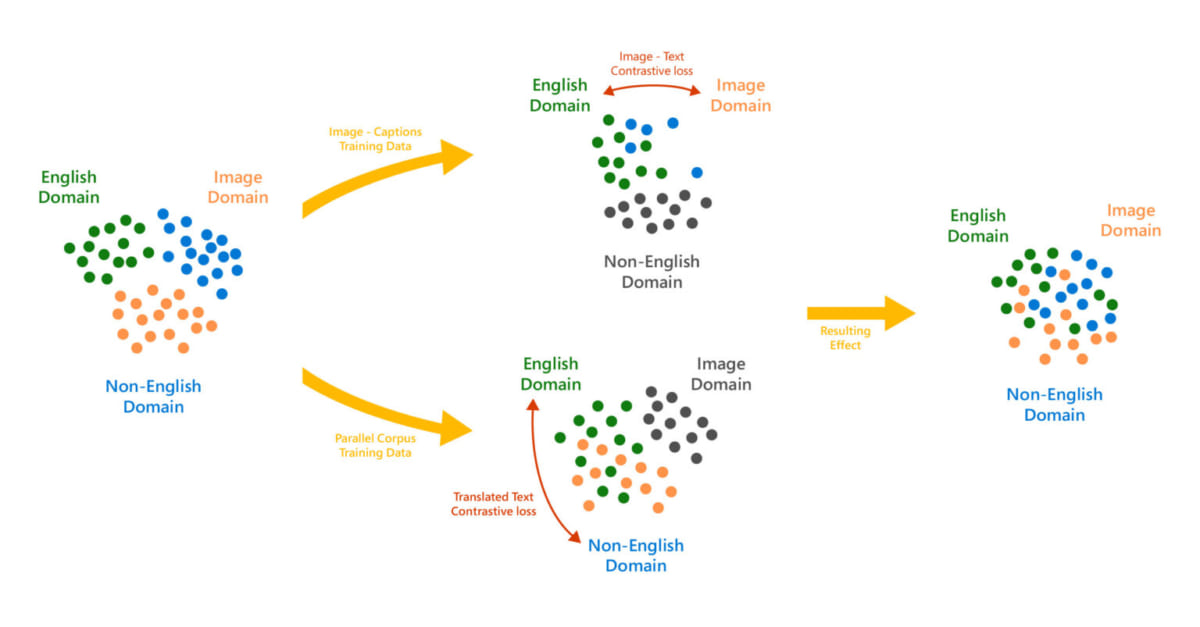

The large and diverse training dataset produced a robust model that can handle a wide variety of images. To achieve universality, we also trained the model on a parallel corpus of 500 million translation pairs. These pairs were created by extracting sentences from document-aligned web pages in a Common Crawl corpus. In addition, by adding a contrastive task over translated sentence pairs, we were able to create language-independent vector representations of captions, enhancing the universality of the model.

Model architecture and training

T-Bletchley consists of Transformer-based image and text encoders, both with architectures similar to that of BERT-large.

Images and captions were encoded independently, and the model was trained by applying a contrastive loss to the resulting image and text vectors. Similarly, to obtain language-agnostic representations, we encoded the two sentences of each translation pair independently and applied a contrastive loss to the resulting batches of vectors.

In this way, we were able to align captions written in various languages with their corresponding images, even though the image-caption pairs were primarily in English.
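To make the contrastive objective concrete, here is a minimal pure-Python sketch. The article does not give the exact loss, so the form below (a cross-entropy over scaled dot products, averaged over the image-to-text and text-to-image directions, with a `temperature` parameter) is an assumption in the style of other image-text models, and the function names are hypothetical.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cross_entropy_row(logits, target):
    """Cross-entropy of a softmax over `logits` against index `target`."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def contrastive_loss(image_vecs, text_vecs, temperature=0.1):
    """Average of image-to-text and text-to-image cross-entropy over a batch.

    Row i of each list is assumed to be a matching pair, and all vectors
    are assumed to be unit length. `temperature` is an assumed hyperparameter.
    """
    n = len(image_vecs)
    sims = [[dot(img, txt) / temperature for txt in text_vecs] for img in image_vecs]
    i2t = sum(cross_entropy_row(sims[i], i) for i in range(n)) / n
    t2i = sum(cross_entropy_row([sims[r][j] for r in range(n)], j) for j in range(n)) / n
    return (i2t + t2i) / 2

# Matching pairs point the same way, so the loss is near zero.
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
# Mismatched pairs are penalized heavily.
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

The same function could be applied to a batch of translation pairs by passing the vectors of the two languages in place of the image and text vectors.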

We leveraged kernels from the DeepSpeed library (which is compatible with PyTorch) to implement the Transformer, and we used the ZeRO optimizer to train the model.

Contrastive loss is a loss calculated from the distance between two points in embedding space. In the context of this article, each image and its descriptive caption were encoded into vectors, and the model was trained using the distances calculated from these vectors.

DeepSpeed is an open source library, developed by Microsoft and compatible with PyTorch, for efficiently training large language models. ZeRO, an optimizer included in DeepSpeed, is a new parallelization optimizer that greatly increases the number of parameters that can be trained while significantly reducing the resources required for model and data parallelism. For more information on DeepSpeed and ZeRO, see the Microsoft Research blog post “ZeRO and DeepSpeed: A New Optimization System Enables Training Models with Over 100 Billion Parameters”.

Detailed model evaluation

T-Bletchley set state-of-the-art records on multiple public benchmarks.

English

For the English evaluation, we followed the prompt engineering and ensemble done in the ALIGN paper published by Google. T-Bletchley beats Google’s ALIGN model in the English image language benchmark and sets a new standard in zero-shot image classification pioneered by OpenAI’s CLIP model .

| Model name | ImageNet | CIFAR-100 | CIFAR-10 | COCO R@1 image to text | COCO R@1 text to image |
|---|---|---|---|---|---|
| ALIGN | 76.4 | – | – | 58.6 | 45.6 |
| T-Bletchley | 79.0 | 83.5 | 97.7 | 59.1 | 43.3 |

When fine-tuned for retrieval, T-Bletchley outperformed ALIGN, the previous state of the art, by more than two points on the COCO test set.

| Model name | Flickr30k R@1 image to text | Flickr30k R@1 text to image | COCO R@1 image to text | COCO R@1 text to image |
|---|---|---|---|---|
| OSCAR | – | – | 73.5 | 57.5 |
| ALIGN | 95.3 | 84.9 | 77.0 | 59.5 |
| T-Bletchley | 97.1 | 87.4 | 80.2 | 62.3 |
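The retrieval tables report Recall@1 (R@1), the fraction of queries whose correct match is ranked first. A minimal sketch of the metric follows. It assumes exactly one correct candidate per query, which is a simplification (benchmark images can have several reference captions), and the score matrix holds made-up values, not benchmark data.

```python
def recall_at_k(similarity, k=1):
    """Fraction of queries whose correct item is among the top-k scores.

    similarity[i][j] is the score between query i and candidate j;
    candidate i is assumed to be the single correct match for query i.
    """
    hits = 0
    for i, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)

# Toy score matrix (assumed values): 3 text queries x 3 candidate images.
scores = [
    [0.9, 0.2, 0.1],  # query 0: correct image ranked first
    [0.3, 0.4, 0.8],  # query 1: correct image ranked second
    [0.1, 0.2, 0.7],  # query 2: correct image ranked first
]
r1 = recall_at_k(scores, k=1)
r2 = recall_at_k(scores, k=2)
print(f"R@1 = {r1:.3f}, R@2 = {r2:.3f}")
```

Swapping the roles of rows and columns gives the other direction, so image-to-text and text-to-image recall can be computed from the same similarity matrix.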

T-Bletchley produces state-of-the-art results compared to English-only models on English-specific tasks. The model’s English performance has not deteriorated even with universal language support!

In June 2021, a research team at Google published a paper on training an image-language representation model using large-scale uncleaned (noisy) training data. The model discussed in the paper was named Large-scale ImaGe and Noisy-text embedding, or ALIGN for short. Its performance was evaluated with the prompt ensemble method, which was also used to evaluate CLIP, the image-language representation model developed by OpenAI. Specifically, for an input image, the model selects the best-matching text prompt of the form “A photo of a {class name}”.

(*Translation Note 8) CLIP is an image classification model announced by OpenAI in January 2021. It achieves high-accuracy classification without fine-tuning for a specific classification task.
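The prompt ensemble idea can be sketched as follows. This is a hedged illustration, not the evaluation code used for T-Bletchley or ALIGN: `toy_text_encoder` is a hypothetical keyword-counting stand-in for a real text encoder, the image vector is made up, and every template other than “A photo of a {}.” is an assumption.

```python
import math

def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def toy_text_encoder(prompt):
    """Hypothetical stand-in for a text encoder: counts two keywords."""
    words = prompt.lower().replace(".", "").split()
    # The constant third component keeps the vector from being all zeros.
    return [float(words.count("cat")), float(words.count("dog")), 0.1]

# Only the first template appears in the article; the others are assumed.
TEMPLATES = ["A photo of a {}.", "A blurry photo of a {}.", "A drawing of a {}."]

def class_embedding(class_name, encode_text):
    """Average the normalized embeddings of all prompts for one class."""
    vecs = [normalize(encode_text(t.format(class_name))) for t in TEMPLATES]
    mean = [sum(col) / len(vecs) for col in zip(*vecs)]
    return normalize(mean)

def classify(image_vec, class_names, encode_text):
    """Pick the class whose ensembled prompt embedding is closest to the image."""
    image_vec = normalize(image_vec)
    scores = {
        name: sum(a * b for a, b in zip(image_vec, class_embedding(name, encode_text)))
        for name in class_names
    }
    return max(scores, key=scores.get)

# Pretend this vector came from the image encoder for a picture of a dog.
image_vec = [0.2, 0.9, 0.1]
predicted = classify(image_vec, ["cat", "dog"], toy_text_encoder)
print(predicted)
```

No classifier is trained here: the class names alone define the label set, which is what makes the classification zero-shot.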

Universal

The universal search ability of T-Bletchley was evaluated on the Multi30k, COCO-CN, and COCO-JP datasets and compared with multilingual models. Even before fine-tuning, the model significantly outperforms its predecessors.

| Experimental setting | Model name | Multi30k French | Multi30k German | Multi30k Czech | COCO Chinese | COCO Japanese |
|---|---|---|---|---|---|---|
| Zero-shot | M3P | 27.1 | 36.8 | 20.4 | 32.3 | 33.3 |
| Zero-shot | T-Bletchley | 85.0 | 83.2 | 81.2 | 81.5 | 64.8 |

After fine-tuning, T-Bletchley sets new state-of-the-art results in multiple languages, as shown in the table below.

| Experimental setting | Model name | Multi30k French | Multi30k German | Multi30k Czech | COCO Chinese | COCO Japanese |
|---|---|---|---|---|---|---|
| Fine-tuned | MULE | 62.3 | 64.1 | 57.7 | 75.6 | 75.9 |
| Fine-tuned | SMALR | 65.9 | 69.8 | 64.8 | 76.7 | 77.5 |
| Fine-tuned | M3P | 73.9 | 82.7 | 72.2 | 86.2 | 87.9 |
| Fine-tuned | T-Bletchley | 94.6 | 94.3 | 93.6 | 89.0 | 86.3 |

The next deployment

T-Bletchley’s goal is to create a model that seamlessly understands text and images in the same way that humans do. This first version of the model is an important breakthrough in that mission. The model is expected to improve image question answering, image search, and image-to-image search experiences in Bing, Microsoft Office, and Azure.