*This article was contributed by the AI Defense Research Institute, a joint research organization of ChillStack Co., Ltd. and Mitsui Bussan Secure Directions Co., Ltd.

This series, titled “AI*1 Security Super Primer”, covers a wide range of AI security topics in an easy-to-understand manner. By reading it, you will gain a bird’s-eye view of AI security.

*1 In this series, “AI” refers to the whole of a system, built with computer technology and especially machine learning, that can perform tasks normally requiring human intelligence, such as image classification and speech recognition.

Contents

- Series list

- Overview of this column

- Why do we need OSINT?

- OSINT for AI

- Acquire OSINT information

- Gathering datasets

- OSINT example for AI products

- Evasion of malware detection

- Bypassing spam filters

- Summary

Series list

The “AI Security Super Primer” series consists of eight columns.

- Part 1: Introduction – Environment and Security Surrounding AI –

- Part 2: Attacks that deceive AI – adversarial samples –

- Part 3: AI Hijacking Attack – Training Data Poisoning –

- Part 4: AI Privacy Infringement – Membership Inference –

- Part 5: Attacks that falsify AI inference logic – node injection –

- Part 6: Intrusion into AI systems – Exploitation of machine learning frameworks –

- Part 7: Background Check on AI – OSINT for AI –

- Part 8: How to develop secure AI? – Domestic and international guidelines –

Parts 1 to 6 have already been published. Please read them if you are interested.

Overview of this column

This is Part 7, “Background Check on AI – OSINT for AI –”.

In this column, we discuss how an attacker can use legally available information to investigate the internal details (dataset, architecture, etc.) of the AI they want to attack (hereinafter, the “target AI”) so that they can attack it efficiently.

Investigating a target using legally available information is generally called OSINT (Open Source Intelligence), and it is widely used in intelligence activities and criminal investigations (including cybercrime) in other countries. In this column, we narrow the target of OSINT down to AI, call this “OSINT for AI”, and focus mainly on investigating the internal information of the target AI from the attacker’s point of view.

Why do we need OSINT?

Why would an attacker need to perform OSINT on the target AI?

The motivation is to attack the target AI efficiently.

As an example, take the technique discussed in Part 2, “Attacks that deceive AI – adversarial samples –”, which manipulates the input data fed to an AI in order to mislead it. Consider a scenario in which an attacker tries to fool an image recognition API that has been published online.

In this scenario, the attacker knows only the input data sent to the target AI and the classification result that is returned. In other words, the target AI is a black box to the attacker, which makes the attack harder. However, as explained in “More realistic attack scenarios – Black Box Attacks –” in “Part 2: Attacks that deceive AI – adversarial samples –”, an attack is possible even in a black-box setting.

This figure shows how the target AI is deceived in a black-box setting.

First, the attacker builds, on their own machine, a substitute AI that mimics the target AI (for example, by training it on inputs labeled with the target AI’s responses). Next, the attacker uses this substitute AI to create an adversarial sample that fools it. Finally, the attacker feeds this adversarial sample into the target AI to make it misclassify.

The target AI and the substitute AI differ in their training datasets and architectures, but because adversarial samples are transferable, a sample that fools the substitute AI may also fool the target AI.

Naturally, the more similar the datasets, architectures, etc. of the substitute AI and the target AI, the more similar their decision boundaries become, and the more likely it is that an adversarial sample that fools the substitute AI will also fool the target AI.
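To make the substitute-AI workflow concrete, here is a minimal sketch using only the Python standard library. Everything in it is a hypothetical toy: the “target AI” is a hidden two-feature linear classifier (`target_predict`) that returns only a label, the substitute AI is a logistic regression trained on the target’s answers to a grid of queries, and the adversarial sample is made with a single FGSM-style signed-gradient step (`eps` is an assumed perturbation budget). Real targets such as image recognition APIs are far more complex, so treat this as an illustration of the idea rather than an attack implementation.

```python
import math

# Hypothetical black-box "target AI": a linear classifier whose weights are
# hidden from the attacker. It returns only the predicted label (0 or 1).
_W_TARGET = (2.0, -1.0)
_B_TARGET = 0.5


def target_predict(x):
    score = _W_TARGET[0] * x[0] + _W_TARGET[1] * x[1] + _B_TARGET
    return 1 if score > 0 else 0


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_substitute(points, labels, lr=0.1, epochs=500):
    """Fit a logistic-regression substitute AI on (query, returned label) pairs."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(points)
    for _ in range(epochs):
        gw0 = gw1 = gb = 0.0
        for (x0, x1), y in zip(points, labels):
            err = sigmoid(w[0] * x0 + w[1] * x1 + b) - y  # gradient of log loss
            gw0 += err * x0
            gw1 += err * x1
            gb += err
        w[0] -= lr * gw0 / n
        w[1] -= lr * gw1 / n
        b -= lr * gb / n
    return w, b


def substitute_predict(w, b, x):
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_step(w, x, eps):
    """One signed-gradient step on the substitute that lowers its class-1 score.

    For logistic regression the gradient of the logit w.r.t. x is simply w.
    """
    return (x[0] - eps * sign(w[0]), x[1] - eps * sign(w[1]))


# 1. Query the target on a grid of inputs and record only the returned labels.
grid = [i * 0.5 for i in range(-6, 7)]
queries = [(a, c) for a in grid for c in grid]
answers = [target_predict(q) for q in queries]

# 2. Build the substitute AI from the query results alone.
w_sub, b_sub = train_substitute(queries, answers)

# 3. Craft an adversarial sample against the substitute, then replay it on the target.
x_clean = (1.0, 0.5)
x_adv = fgsm_step(w_sub, x_clean, eps=1.0)
print(target_predict(x_clean), target_predict(x_adv))  # prints: 1 0
```

The sample crafted only against the substitute also flips the target’s decision, which is the transferability described above. Because the substitute was trained on the target’s own answers, its decision boundary ends up close to the target’s, which is why the transfer succeeds so reliably in this toy case.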

In this way, knowing the target AI’s internal information (dataset, architecture, etc.) increases the success rate of the attack, which is why the attacker performs OSINT on the target AI.

OSINT for AI

From here, we will look at specific methods of OSINT for AI.

To the best of my knowledge, OSINT for AI is best documented in a project called the Adversarial ML Threat Matrix. By mapping attacks on systems that use machine learning onto the ATT&CK*1 framework, this project aims to draw security professionals into the security of machine learning (AI security).

Below, we introduce OSINT for AI following the Adversarial ML Threat Matrix.

* 1 ATT&CK Framework ATT

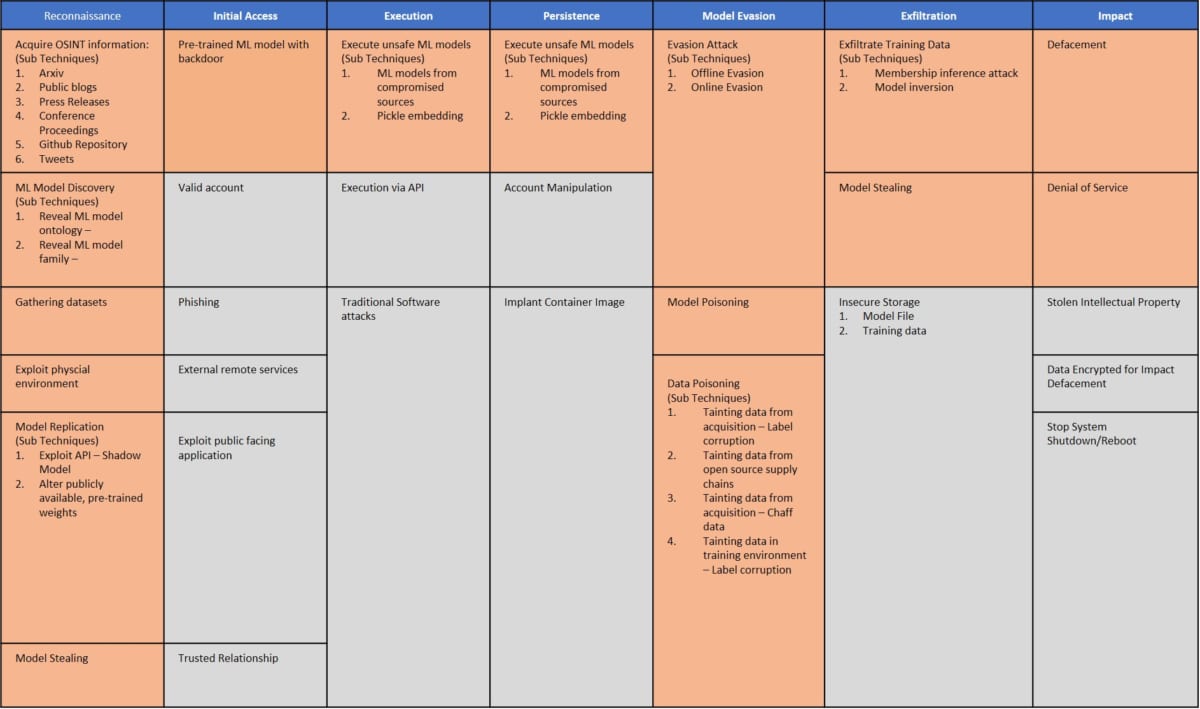

&CK is an abbreviation for Adversarial Tactics , Technologies , and Common Knowledge, and is knowledge that systematizes the tactics and techniques of attackers based on observations of actual cyberattacks . Below is the Adversarial ML Threat Matrix framework.

This framework categorizes threats to AI into tactics such as Reconnaissance, Initial Access, Execution, Exfiltration, and Impact, and lists specific techniques under each.

Among these categories, “Reconnaissance” corresponds to OSINT for AI. Here, we focus on two of its techniques: “Acquire OSINT information” and “Gathering datasets”.

Acquire OSINT information

This technique attempts to identify internal information about the target AI by legally collecting publicly available information. The Adversarial ML Threat Matrix gives the following sources as examples:

- arXiv

- Public blogs

- Press Releases

- Conferences

- GitHub repositories

- Tweets

Paper archives such as arXiv contain a wealth of information on AI architectures and more. In addition, AI product developers and sales representatives sometimes publish product details in technical blogs, press releases, conference talks, and so on.

Open-source face recognition and object detection engines are also sometimes published in GitHub repositories, in which case the internal workings of the AI can be understood at the source-code level.

By investigating such sources, attackers can legally obtain internal information about the target AI.

Although it is not listed in the Adversarial ML Threat Matrix, patent information can also reveal some internal details of an AI. For example, you can look up information on AI products with “Google Patents”, a patent document search service provided by Google. As a test, try searching for something like “"AI product name" architecture”. You may find fairly detailed internal information about the product.

Gathering datasets

This technique aims to identify or estimate the training data of the target AI.

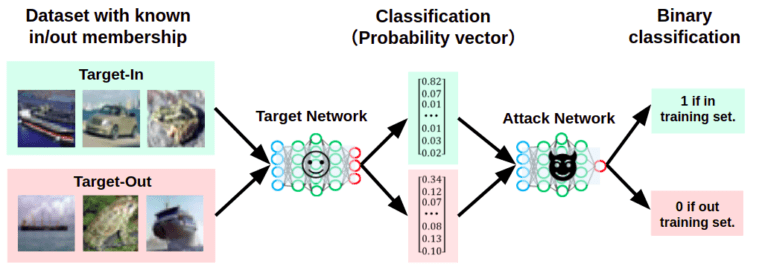

If the target AI’s dataset cannot be accessed directly, the attacker must estimate its training data in a black-box setting. In this case, membership inference, which was discussed in “Part 4: AI Privacy Infringement – Membership Inference –”, is effective.

By performing membership inference on the target AI, the attacker can estimate whether given data was included in the target AI’s training data, even in a black-box setting.
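One of the simplest forms of membership inference exploits the fact that an overfitted model tends to be more confident on records it was trained on. The sketch below is a simplified confidence-threshold variant, not necessarily the method described in Part 4. It assumes a hypothetical black-box API, `target_confidence`, that returns a single confidence score, a hypothetical private training set, and a hand-picked threshold of 0.9.

```python
import math

# Hypothetical private training data of the target AI (unknown to the attacker).
_PRIVATE_TRAINING_DATA = [(1.0, 2.0), (3.5, -1.0), (0.0, 0.0), (-2.0, 4.0)]


def target_confidence(record):
    """Hypothetical black-box API returning only a confidence score in (0, 1].

    It models a heavily overfitted target: confidence decays with the distance
    to the closest memorised training record.
    """
    d = min(math.dist(record, t) for t in _PRIVATE_TRAINING_DATA)
    return math.exp(-d)


def infer_membership(candidates, threshold=0.9):
    """Guess that candidates receiving unusually high confidence were training data."""
    return [c for c in candidates if target_confidence(c) >= threshold]


candidates = [(1.0, 2.0), (1.5, 2.5), (0.0, 0.0), (5.0, 5.0), (-2.0, 4.0)]
print(infer_membership(candidates))  # prints: [(1.0, 2.0), (0.0, 0.0), (-2.0, 4.0)]
```

Only the three candidates that exactly match hidden training records clear the threshold; the nearby record `(1.5, 2.5)` receives a confidence of about 0.49 and is judged a non-member. In practice, the threshold would have to be calibrated rather than hand-picked.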

If you would like to know more about membership inference, please refer to “Part 4: AI Privacy Infringement – Membership Inference –”.

OSINT example for AI products

After reading this far, many readers may think that OSINT for AI is merely theoretical and cannot be carried out in practice.

However, OSINT for AI has in fact been used to attack real AI products. Here, we look at two examples.

- Evasion of malware detection

- Bypassing spam filters

Evasion of malware detection

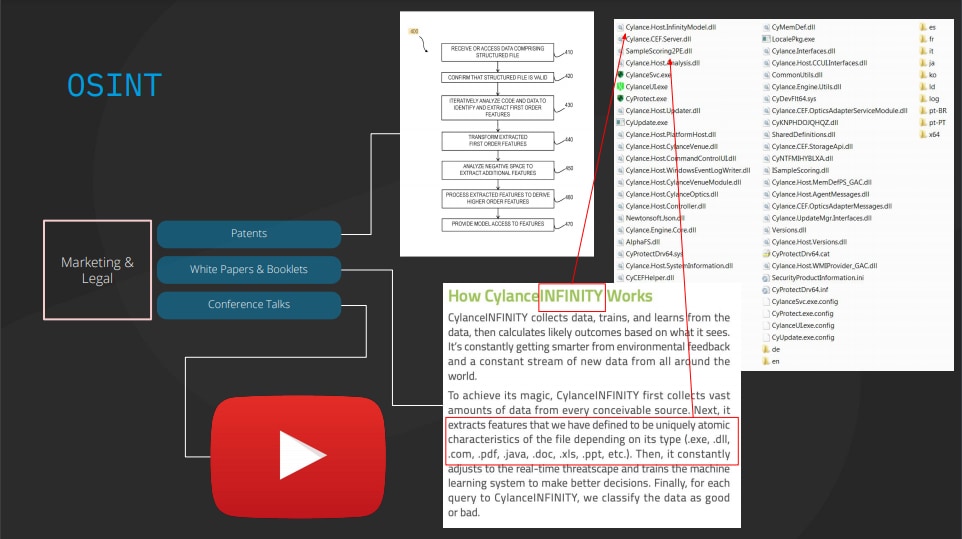

Researchers at SkyLight tested whether they could evade malware detection by Cylance, an AI-based malware detection product.

In this test, the researchers identified Cylance’s malware detection threshold and, by appending a “special string” to malware so that its score fell below that threshold, succeeded in evading detection.

OSINT for Cylance (Source: “Cylance, I Kill You!”)

This figure represents an example of OSINT for Cylance.

The researchers investigated Cylance’s internals using patent information, white papers, conference presentations, and so on, and reverse-engineered its malware detection model. They then identified a special string that pushes the score below the detection threshold and appended it to malware that should have been detected, thereby evading detection.
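The idea of appending strings until the score drops below the threshold can be sketched as a greedy loop. This toy example rests entirely on assumptions: `TOKEN_WEIGHTS` is a hypothetical, already reverse-engineered linear scoring model over strings found in a file, `THRESHOLD` is an assumed detection threshold, and the `benign_marker_*` strings are made up. None of them reflects Cylance’s actual model, features, or the strings used in the research.

```python
# Hypothetical reverse-engineered model: each string found in a file adds its
# weight to a malware score. Positive = looks malicious, negative = looks benign.
TOKEN_WEIGHTS = {
    "CreateRemoteThread": 0.35,
    "VirtualAllocEx": 0.30,
    "WriteProcessMemory": 0.30,
    "benign_marker_a": -0.20,  # hypothetical strings that pull the score down
    "benign_marker_b": -0.15,
}
THRESHOLD = 0.5  # assumed detection threshold


def malware_score(strings):
    """Sum the weights of known strings; unknown strings contribute nothing."""
    return sum(TOKEN_WEIGHTS.get(s, 0.0) for s in strings)


def is_detected(strings):
    return malware_score(strings) >= THRESHOLD


def evade(strings, max_appends=20):
    """Greedily append the most benign-weighted string until the file is no longer detected."""
    most_benign = min(TOKEN_WEIGHTS, key=TOKEN_WEIGHTS.get)
    patched = list(strings)
    while is_detected(patched) and len(patched) - len(strings) < max_appends:
        patched.append(most_benign)
    return patched


sample = ["CreateRemoteThread", "VirtualAllocEx", "WriteProcessMemory"]
patched = evade(sample)
print(is_detected(sample), is_detected(patched), len(patched) - len(sample))  # prints: True False 3
```

Here the three suspicious API names give a score of about 0.95, and three copies of the most benign-looking string bring it down to about 0.35, below the assumed threshold of 0.5. The `max_appends` cap simply keeps the loop from running forever if no string can lower the score enough.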

Please note that this issue has already been fixed.

Bypassing spam filters

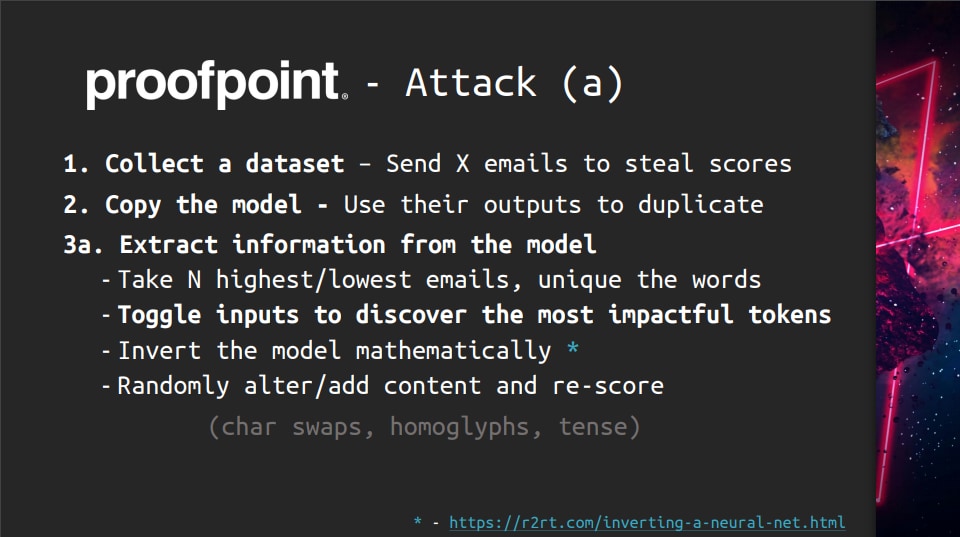

Researchers at Silent Break Security tested whether they could evade spam detection by Proofpoint Email Protection, an AI-based spam filter.

In this test, the researchers estimated Proofpoint’s spam detection threshold and successfully generated spam email text that scored below it.

This figure represents an example of OSINT for Proofpoint.

The researchers collected the confidence scores that Proofpoint writes into email headers (information about how likely an email is to be spam) and built a substitute model that mimics Proofpoint. Using this substitute model, they then investigated the spam detection threshold and generated spam text scoring below it, thereby evading Proofpoint’s spam detection.
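The first part of this workflow, harvesting scores from email headers and narrowing down the threshold, can be sketched with Python’s standard `email` module. The header name `X-Hypothetical-Spam-Score`, the sample messages, and the assumption that the attacker can tell which messages were blocked are all hypothetical; Proofpoint’s real headers and scoring are not reproduced here. Training the substitute model and generating text that stays under the threshold are omitted.

```python
from email import message_from_string

SCORE_HEADER = "X-Hypothetical-Spam-Score"  # hypothetical header name


def read_score(raw_email):
    """Extract the filter's confidence score from a raw email, or None if absent."""
    msg = message_from_string(raw_email)
    value = msg.get(SCORE_HEADER)
    return float(value) if value is not None else None


def estimate_threshold(observations):
    """Estimate the threshold from (score, was_blocked) pairs as the midpoint
    between the highest passing score and the lowest blocked score."""
    passed = [score for score, was_blocked in observations if not was_blocked]
    blocked = [score for score, was_blocked in observations if was_blocked]
    return (max(passed) + min(blocked)) / 2


# Hypothetical collected messages, each paired with whether it was blocked.
collected = [
    ("X-Hypothetical-Spam-Score: 12\nSubject: meeting\n\nSee you at 3.", False),
    ("X-Hypothetical-Spam-Score: 45\nSubject: newsletter\n\nMonthly news.", False),
    ("X-Hypothetical-Spam-Score: 61\nSubject: offer\n\nClaim your prize!", True),
    ("X-Hypothetical-Spam-Score: 90\nSubject: WIN\n\nFree money now!!!", True),
]
observations = [(read_score(raw), was_blocked) for raw, was_blocked in collected]
print(estimate_threshold(observations))  # prints: 53.0
```

A real attacker would need many more samples to narrow the estimate; in the research described above, the collected scores were also used to build the substitute model.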

This issue has been registered as vulnerability CVE-2019-20634, and details are available in the NVD.

As these examples show, OSINT against AI is a real threat and, without countermeasures, can lead to actual attacks.

Summary

In this column, I introduced OSINT for AI, the “background check” an attacker performs in order to attack AI effectively.

We saw that by collecting and analyzing patent information, technical blogs, confidence scores output by AI, and so on, an attacker can obtain enough internal information about an AI to mount an attack.

The most effective way to prevent OSINT against AI is not to release any information about the AI externally, but this is sometimes not possible.

Therefore, it is important to recognize the threat of OSINT against AI accurately and to develop a strategy for information disclosure, such as minimizing the information disclosed externally.

This concludes Part 7, “Background Check on AI – OSINT for AI –”.

Next time, I will cover Part 8, “How to develop secure AI? – Domestic and international guidelines –”.

Finally, ChillStack Co., Ltd. and Mitsui Bussan Secure Directions Co., Ltd. provide training on the safe development, provision, and use of AI.

In this training, you can learn, through lectures and hands-on exercises, about various attack methods against AI and their countermeasures, in addition to those explained in this column.

For details on this training and for inquiries, please visit the AI Defense Research Institute.

* The authors of this column are as follows.

| Isao Takaesu (Mitsui Bussan Secure Directions Co., Ltd.) Registered Information Security Specialist. CISSP. He has been engaged in vulnerability assessment of web systems for over 10 years. He also focuses on AI security, researching vulnerabilities in machine learning algorithms and the automation of security tasks using machine learning. His research has been presented at world-renowned hacker conferences such as Black Hat Arsenal, DEFCON, and CODE BLUE. In recent years, he has also contributed to education as a lecturer at Security Camp and SECCON workshops, and as a judge for the AI security competition at Hack In The Box, an international hacker conference. |