Contents

- Introduction

- Measuring human play

- Create a dataset

- Differentiation from past attempts

- Maia: A Better Solution to Match Human Skill Levels

- Maia’s prediction

- Modeling individual player styles with Maia

- Using AI to improve human chess play

- Acknowledgments

Introduction

Published November 30, 2020

Reid McIlroy-Young (PhD Candidate, University of Toronto), Ashton Anderson (Associate Professor, University of Toronto), Jon Kleinberg (Professor, Cornell University), Siddhartha Sen (Principal Scientist, Microsoft)

Artificial intelligence continues to advance rapidly, matching or exceeding human performance on benchmarks in an increasing number of task domains. As a result, researchers in the field are devoting more attention to the interaction between humans and AI in domains where both are active. Just as chess has served as a leading indicator of many central questions throughout the history of AI, it is also well positioned as a model system for studying how humans can collaborate with and learn from AI.

AI-powered chess engines have consistently outperformed human players since 2005, and the chess world has changed further since then, with the arrival of the heuristic-based Stockfish engine in 2008 and the deep reinforcement learning-based AlphaZero engine in 2017. The impact of this evolution has been enormous: record numbers of people are now playing chess, even as AI’s own chess-playing ability continues to improve. These changes provide a unique testbed for studying human-AI interaction. The combination of formidable AI chess prowess and a large, growing human interest in the game has produced a wide variety of playing styles and skill levels.

A great deal of research has tried to match AI chess play to different human skill levels, but the AI often makes different decisions and moves pieces differently than human players at that skill level. Our research goal is to better bridge this gap between AI and human chess-playing behavior. The question for AI and learning is: can AI make the same fine-grained decisions that humans make at a given skill level? This question is a good starting point for aligning AI with human behavior in chess.

Our research team from the University of Toronto, Microsoft Research, and Cornell University has begun investigating ways to better match AI to different human skill levels and, beyond that, to personalize AI models to the playing styles of specific players. Our work consists of two papers, “Aligning Superhuman AI with Human Behavior: Chess as a Model System” and “Learning Personalized Models of Human Behavior in Chess,” as well as a new chess engine called Maia, which is trained on games played by humans so that it plays in a more human-like way. Our results show that AI can indeed predict human decisions at various skill levels, and even at the level of individual players. This work represents a step forward in modeling human decision-making in chess and opens up new possibilities for collaboration and learning between humans and AI.

AlphaZero changed the game by learning solely from the rules of chess and by playing against itself (“self-play”). Previous models, by contrast, relied heavily on libraries of moves and past games to inform their learning. Our model, Maia, is a customized version of Leela Chess Zero (an open-source implementation of AlphaZero). Instead of training Maia on self-play games with the goal of making the best move, we trained Maia on games played by humans with the goal of making the most human-like move. We developed nine Maias to characterize human chess play at different skill levels, corresponding to nine Elo ratings between 1100 and 1900 (Elo is a rating system for estimating players’ relative skill in games like chess). As you will see below, Maia matches human play more closely than any chess engine ever created.
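If you are new to Elo, a rating gap translates into an expected score through the standard Elo formula. The short sketch below evaluates that formula. It is included only to convey how wide the spread between the 1100 and 1900 models is, and it is not part of Maia.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B (win = 1, draw = 0.5, loss = 0)
    under the standard Elo model: E_A = 1 / (1 + 10 ** ((R_B - R_A) / 400))."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # An 800-point gap, the distance between the 1900 and 1100 rating bins.
    print(round(expected_score(1900, 1100), 3))  # prints 0.99
```

Under this model, a 1900-rated player is expected to score about 99% against an 1100-rated player.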

If you’re interested, you can play several versions of Maia on Lichess, a popular open-source online chess platform. Our bots on Lichess are named maia1, maia5, and maia9, and they were trained on human games with Elo ratings of 1100, 1500, and 1900, respectively. These bots and other resources can be downloaded from our GitHub repository.

Measuring human play

What does it mean for a chess engine to match human play? For our purposes, we use a simple metric: given a position that occurred in a game between real humans, what is the probability that the engine chooses the move the human actually played?
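The metric can be written down directly. This is a minimal sketch under two assumptions: a position is identified by a string (such as a FEN), and an engine is any function that returns one move per position. `move_matching_accuracy` is a hypothetical helper name, not part of Maia's code.

```python
from typing import Callable, Iterable, Tuple

Position = str  # e.g. a FEN string (illustrative assumption)
Move = str      # e.g. a UCI move such as "e2e4" (illustrative assumption)


def move_matching_accuracy(
    engine: Callable[[Position], Move],
    examples: Iterable[Tuple[Position, Move]],
) -> float:
    """Fraction of positions in which the engine picks the move the human played."""
    total = 0
    matches = 0
    for position, human_move in examples:
        total += 1
        if engine(position) == human_move:
            matches += 1
    if total == 0:
        raise ValueError("no examples to evaluate")
    return matches / total
```

Any engine can be plugged in this way, for example a depth-limited Stockfish or a Maia model wrapped in a function that returns its top move.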

Building a chess engine that matches human play by this definition is difficult. Because the number of possible positions is astronomical, most positions seen in real games occur only once. After just four moves by each player, the number of potential positions is in the hundreds of billions. Furthermore, human players have vastly different playing styles, even at roughly the same skill level. And even the exact same player, facing the exact same position a second time, may choose a different move!

Create a dataset

To rigorously compare how well chess engines match human play, we need a good test set on which to evaluate them. We therefore created nine test sets, one for each narrow rating range, as follows:

- First, we created rating bins for each 100-point range (1200-1299, 1300-1399, and so on).

- Each bin contained all games in which both players were in that rating range.

- From each bin, we drew 10,000 games, ignoring games played at Bullet and HyperBullet speeds. At these speeds (a minute or less per player), players tend to make lower-quality moves to avoid losing on time.

- In each game, we discarded the first 10 moves made by each player, to ignore most memorized opening moves.

- We also discarded any move made with less than 30 seconds left on the player’s clock. Such moves are made only to finish the game in time, so discarding them avoids introducing essentially random moves into the test set.

Applying these restrictions gave us nine test sets, one per rating range, each containing roughly 500,000 positions.
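The filtering steps above can be sketched in Python. Several details are hypothetical simplifications: the `Game` record, its field names, and the convention that `clock_seconds[i]` is the mover's remaining time just before ply `i`. Real Lichess PGN exports record clocks differently. This is an illustration of the rules, not the pipeline used in the papers.

```python
import random
from dataclasses import dataclass
from typing import List

EXCLUDED_SPEEDS = {"Bullet", "HyperBullet"}
OPENING_MOVES_PER_PLAYER = 10
MIN_CLOCK_SECONDS = 30.0


@dataclass
class Game:
    """Hypothetical, simplified game record."""
    white_rating: int
    black_rating: int
    time_control: str           # e.g. "Blitz", "Rapid", "Bullet", "HyperBullet"
    moves: List[str]            # plies in order, alternating White and Black
    clock_seconds: List[float]  # mover's remaining time just before each ply (assumed)


def rating_bin(rating: int) -> int:
    """Lower bound of the 100-point bin, e.g. 1234 -> 1200."""
    return (rating // 100) * 100


def games_for_bin(games: List[Game], bin_start: int,
                  sample_size: int = 10_000, seed: int = 0) -> List[Game]:
    """Sample games where both players fall in the bin, skipping Bullet/HyperBullet."""
    eligible = [
        g for g in games
        if rating_bin(g.white_rating) == bin_start
        and rating_bin(g.black_rating) == bin_start
        and g.time_control not in EXCLUDED_SPEEDS
    ]
    rng = random.Random(seed)
    return rng.sample(eligible, min(sample_size, len(eligible)))


def evaluation_plies(game: Game) -> List[int]:
    """Indices of the plies kept as test positions."""
    kept = []
    for ply, seconds_left in enumerate(game.clock_seconds):
        if ply < 2 * OPENING_MOVES_PER_PLAYER:
            continue  # skip each player's first 10 moves (mostly memorized openings)
        if seconds_left < MIN_CLOCK_SECONDS:
            continue  # skip moves made under severe time pressure
        kept.append(ply)
    return kept
```

Seeding the sampler keeps the drawn test set reproducible across runs.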

Differentiation from past attempts

For decades, people have tried to develop chess engines that closely match human play. One motivation is that a human-like chess engine could be a great sparring partner. But being squashed like a bug every game is no fun, so the most popular approach to building engines that match human play has been to create some sort of attenuated version of a strong chess engine. Attenuated engines are created by limiting the engine’s capabilities in some way, such as by reducing the amount of data it learns from or by limiting the depth of the search it performs to choose a move. For example, Lichess’s “play with the computer” feature uses a series of Stockfish models that are limited in how many moves they can look ahead. Chess.com, ICC, FICS, and other platforms all have similar engines. How well do these engines match human play?
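To make depth-limited attenuation concrete, here is a toy sketch of a depth-limited negamax search over a hand-built, hypothetical game tree. Depth is counted in plies (half-moves), and the move names and evaluations are invented for illustration. This is not how Stockfish is implemented, since Stockfish uses alpha-beta search with many refinements. The sketch only shows how a shallow search can prefer a move that a deeper search rejects.

```python
def node(evaluation, children=None):
    """A toy game-tree node: a static evaluation from the side to move's point of
    view, plus child nodes keyed by (hypothetical) move names."""
    return {"eval": evaluation, "children": children or {}}


def negamax(n, depth):
    """Depth-limited negamax: once the depth budget (in plies) runs out, or the
    node has no children, fall back on the static evaluation."""
    if depth == 0 or not n["children"]:
        return n["eval"]
    return max(-negamax(child, depth - 1) for child in n["children"].values())


def best_move(root, depth):
    """Root move with the highest score when searching `depth` plies (depth >= 1)."""
    children = root["children"]
    return max(children, key=lambda move: -negamax(children[move], depth - 1))


# Hypothetical position: grabbing a pawn looks good one ply deep,
# but the reply "trap_queen" wins White's queen.
ROOT = node(0, {
    "grab_pawn": node(-1, {          # Black to move, a pawn down
        "trap_queen": node(-8),      # White to move, queen lost for a pawn
        "ignore": node(1),           # White to move, still a pawn up
    }),
    "develop_knight": node(0, {      # Black to move, equal
        "develop": node(0),
    }),
})

if __name__ == "__main__":
    print(best_move(ROOT, 1))  # prints grab_pawn
    print(best_move(ROOT, 2))  # prints develop_knight
```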

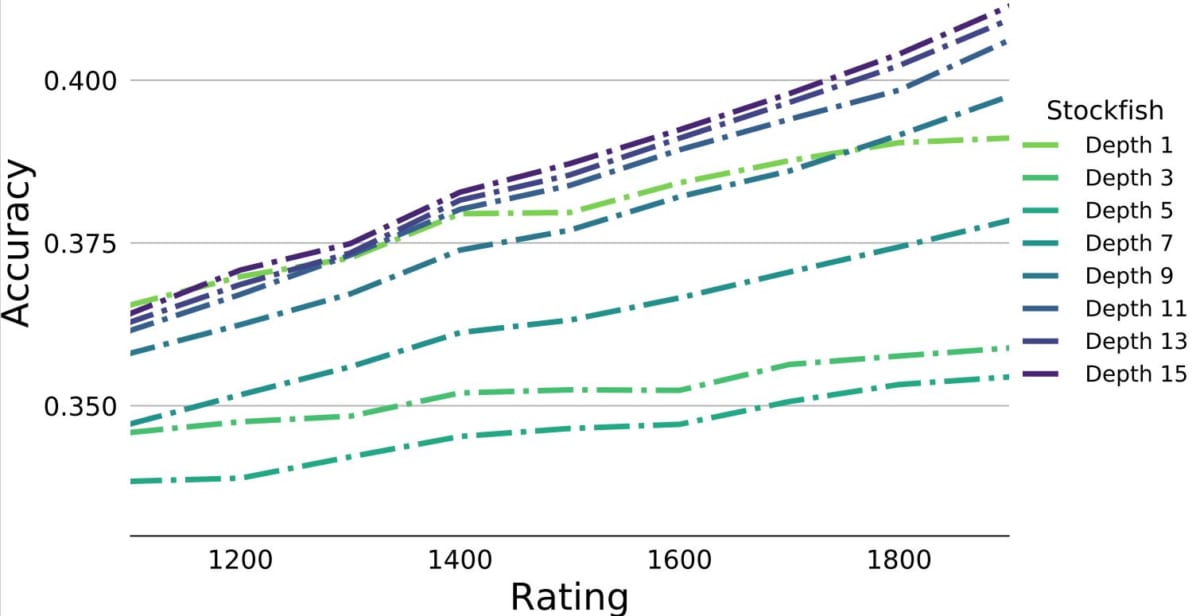

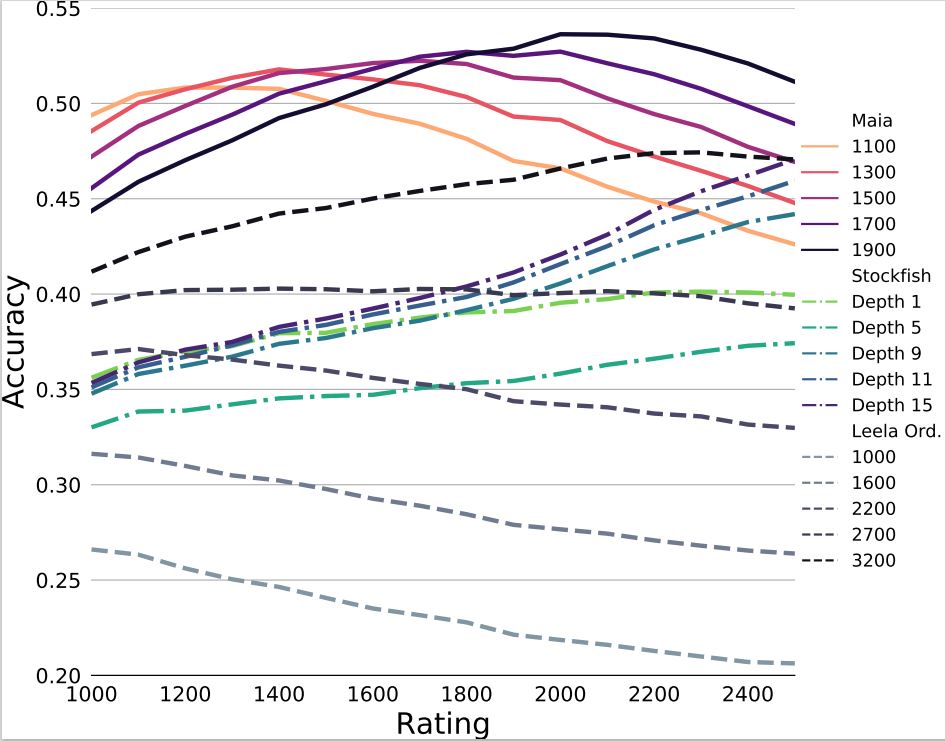

Stockfish: We created several attenuated versions of Stockfish, one for each depth limit (e.g., Stockfish at depth 3 can only look 3 moves ahead), and tested them on our test sets. In the graph, we break down accuracy by rating level so that you can see whether an engine plays like players of a particular skill level.

As the graph shows, it doesn’t go so well. Attenuated versions of Stockfish match human moves only about 35-40% of the time. Just as importantly, each attenuated version’s accuracy curve is strictly increasing. In other words, even Stockfish at depth 1 is better at matching the moves of 1900-rated players than those of 1100-rated players. This suggests that attenuating Stockfish by limiting its search depth does not capture human play at lower skill levels. Instead, it looks like regular Stockfish chess with a lot of noise mixed in.

Leela Chess Zero: Attenuated Stockfish fails to capture the characteristics of human play at a given level. What about Leela Chess Zero, an open-source implementation of AlphaZero that learns chess through self-play games and deep reinforcement learning? Unlike Stockfish, Leela incorporates no human knowledge into its design. Despite this, the chess community was very excited by how Leela seemed to play more like human players.

In the analysis above, we looked at a number of different versions of Leela from different generations and ranked them by their relative skill (commentators had noted that early generations of Leela played in a particularly human-like way). People were right that the best versions of Leela match human moves more often than Stockfish does. But Leela still does not capture human play at different skill levels: each version’s matching accuracy either consistently rises or consistently falls as human skill level increases. Characterizing human play at a particular level requires a different approach.

Maia: A Better Solution to Match Human Skill Levels

Maia is a chess engine designed to play like a human at a specific skill level. To make its moves match human moves, we adapted the AlphaZero/Leela Chess framework to learn from human games. We created nine different versions, one for each rating range from 1100-1199 to 1900-1999. We built nine training datasets in the same way as the test datasets described above, each containing 12 million games. We then trained a separate Maia model on each rating bin, producing nine versions from Maia 1100 to Maia 1900.

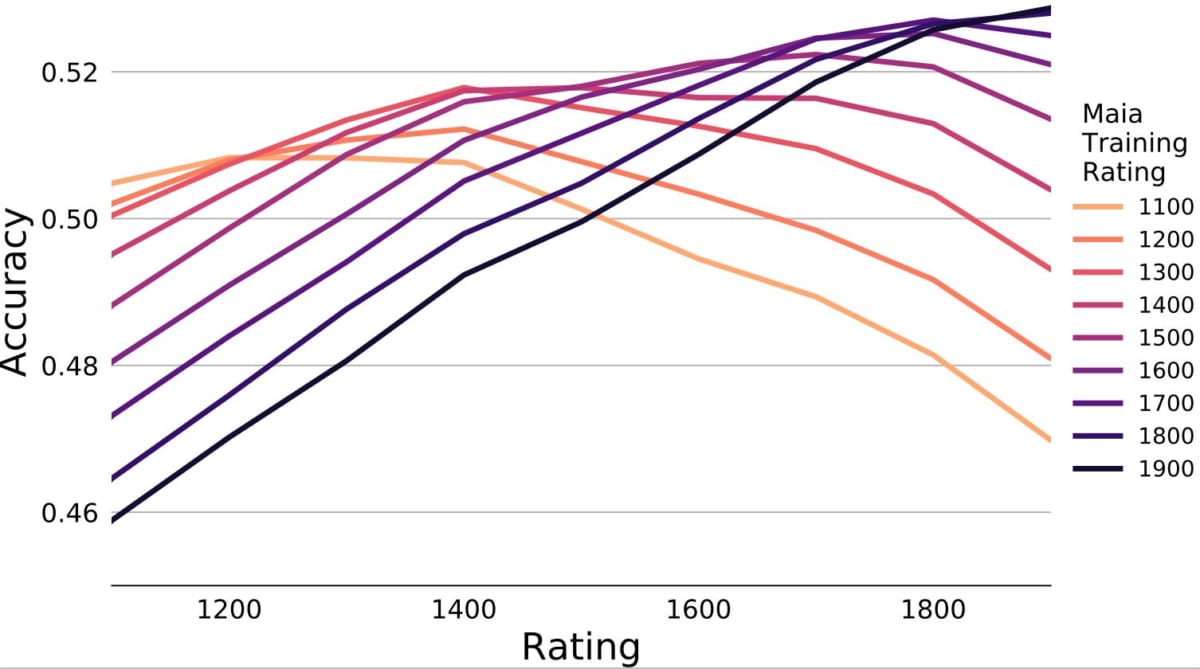

As the graph above shows, Maia’s results are qualitatively different from those of Stockfish and Leela. First, move-matching performance improves significantly. Maia’s lowest accuracy, 46%, occurs when the model trained on 1900-rated players predicts the moves of 1100-rated players. Even that is as high as the best performance any Stockfish or Leela version achieved at any skill level we tested. Maia’s highest accuracy is over 52%: more than half the time, Maia 1900 predicts the exact move a 1900-rated human played in a real game.

Importantly, every version of Maia uniquely captures a specific human skill level, because each version’s accuracy curve reaches its maximum at a different human rating. Even Maia 1100 achieves over 50% accuracy in predicting the moves of 1100-rated players, which is far better than it does at predicting the moves of 1900-rated players.
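The two patterns discussed here can be checked mechanically. One pattern is a curve that keeps rising with rating, as with attenuated Stockfish. The other is a curve that peaks at a particular rating, as with Maia. This minimal sketch assumes each test position has already been scored as a match or a miss and tagged with its rating bin. The function names are hypothetical. An attenuated-Stockfish curve would pass `is_strictly_increasing`, while different Maia versions would have different values of `peak_rating`.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_rating(results: Iterable[Tuple[int, bool]]) -> Dict[int, float]:
    """Turn (rating_bin, matched) pairs, one per test position, into an accuracy curve."""
    hits: Dict[int, int] = defaultdict(int)
    totals: Dict[int, int] = defaultdict(int)
    for rating, matched in results:
        totals[rating] += 1
        hits[rating] += int(matched)
    return {r: hits[r] / totals[r] for r in sorted(totals)}


def peak_rating(curve: Dict[int, float]) -> int:
    """Rating bin at which the accuracy curve is highest."""
    return max(curve, key=curve.get)


def is_strictly_increasing(curve: Dict[int, float]) -> bool:
    """True if accuracy rises at every step from lower to higher rating bins."""
    values = [curve[r] for r in sorted(curve)]
    return all(a < b for a, b in zip(values, values[1:]))
```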

These results have profound implications for chess: there is such a thing as an “1100-rated style,” and such playing styles can be captured by machine learning models. This came as a surprise to us. Human play could have been a mixture of good moves and random blunders, with 1100-rated players blundering more often and 1900-rated players less often. In that case, it would have been impossible to capture an 1100-rated style, because random blunders are, by definition, unpredictable. But since we can predict human play at different levels, there appears to be a reliable, predictable, and possibly even algorithmically teachable difference between one human skill level and the next.

Maia’s prediction

Details are available in the paper, but one of the most exciting things about Maia is its ability to predict mistakes. For example, even when a human player makes an obvious blunder such as “hanging” the queen (that is, leaving it where the opponent can simply capture it), Maia predicts the exact mistake with 25% accuracy or better. This could be very valuable for average players trying to improve: Maia could look at your games and tell you which mistakes were predictable and which were random. If your mistakes are predictable, you know what to work on to reach the next level.

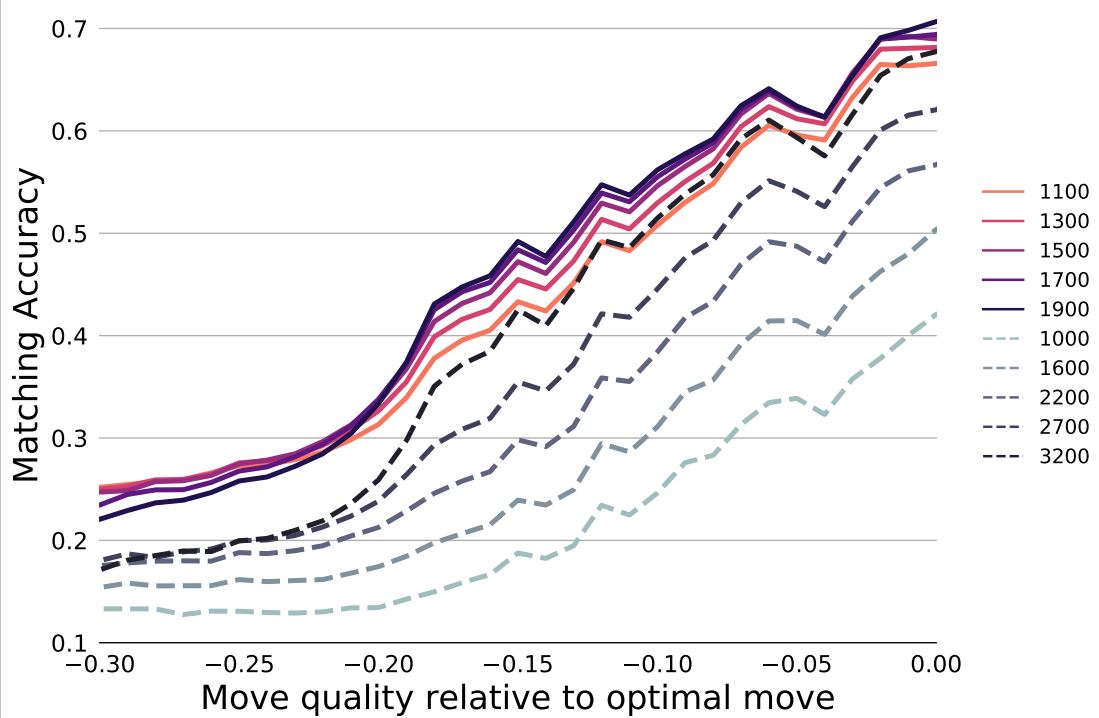

In the same paper, we define a repeatable mistake as “a bad move that more than 10% of human players make when faced with a given position,” and call such a move a collective blunder. Predicting collective blunders yielded higher accuracy than predicting non-repeatable mistakes.
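The collective-blunder definition is a simple frequency test. This minimal sketch takes two inputs that are assumed to be already available: the list of moves different players chose in one position, and a set of moves judged bad (for example, by a strong engine). The function name and inputs are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set

COLLECTIVE_THRESHOLD = 0.10  # "more than 10% of human players"


def collective_blunders(moves_played: Iterable[str], bad_moves: Set[str],
                        threshold: float = COLLECTIVE_THRESHOLD) -> Set[str]:
    """Bad moves chosen by more than `threshold` of the players who reached a position.

    How a move is judged "bad" is outside this sketch; the caller supplies `bad_moves`.
    """
    counts = Counter(moves_played)
    total = sum(counts.values())
    if total == 0:
        return set()
    return {move for move in bad_moves if counts[move] / total > threshold}
```

The comparison is strict, so a bad move chosen by exactly 10% of players does not count as a collective blunder.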

in all rating ranges, and (2) both Maia and Leela predict good moves more easily than bad moves.

Modeling individual player styles with Maia

Next, we pushed the modeling of human chess play to the next level: can we predict the moves made by an individual human player?

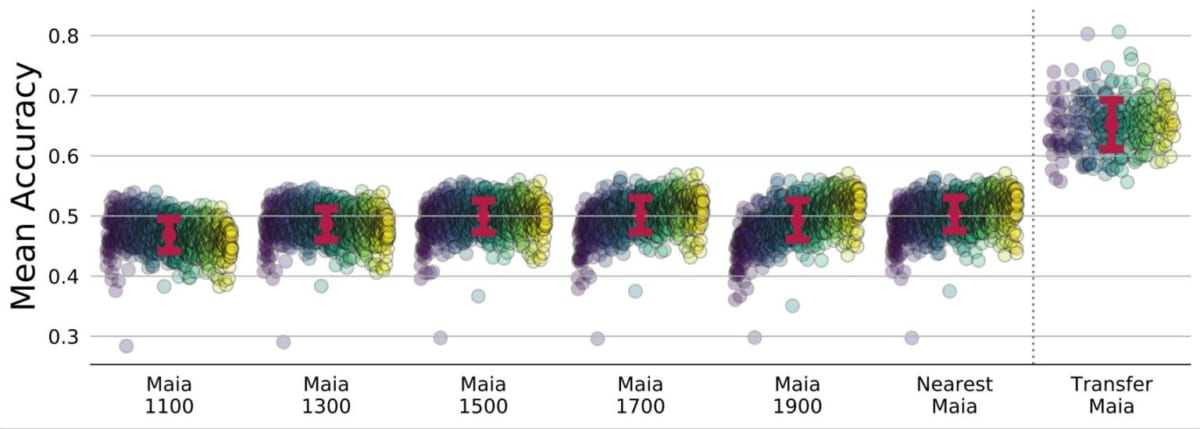

We found that personalizing Maia yielded our biggest performance gains. Whereas the base Maia predicts about 50% of human moves, some personalized models can predict an individual’s moves with up to 75% accuracy.

We obtained these results by fine-tuning Maia. Starting from a base Maia, say Maia 1900, we updated the model by continuing to train it on an individual player’s games. The chart shows that, for predicting individual players’ moves, every personalized model improves substantially over the non-personalized models. The improvement is so large that the accuracies of personalized and non-personalized models barely overlap. Even the personalized model for the least predictable player comes close to 60% accuracy, while the non-personalized model does not reach 60% accuracy even for the most predictable player.
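Fine-tuning means starting from already-trained weights and continuing gradient descent on new data. The sketch below illustrates that idea on a deliberately tiny, hypothetical model rather than Maia's actual neural network. The toy model has one logit per position-move pair and is trained with softmax cross-entropy. The learning rate, epoch count, and data are arbitrary.

```python
import math


def probabilities(logits, position):
    """Softmax over the candidate moves stored for `position`."""
    scores = logits[position]
    top = max(scores.values())
    exps = {mv: math.exp(s - top) for mv, s in scores.items()}
    total = sum(exps.values())
    return {mv: e / total for mv, e in exps.items()}


def train(logits, examples, lr=0.5, epochs=20):
    """Plain SGD on cross-entropy; the gradient for each logit is (p - target).
    Updates `logits` in place and returns it."""
    for _ in range(epochs):
        for position, played in examples:
            probs = probabilities(logits, position)
            for mv, p in probs.items():
                target = 1.0 if mv == played else 0.0
                logits[position][mv] -= lr * (p - target)
    return logits


def fine_tune(base, player_examples, **kwargs):
    """Copy the base model, then keep training the copy on one player's games."""
    personal = {pos: dict(moves) for pos, moves in base.items()}
    return train(personal, player_examples, **kwargs)


if __name__ == "__main__":
    # Population data: most players choose "a" in position "P"; this player prefers "b".
    base = train({"P": {"a": 0.0, "b": 0.0}}, [("P", "a")] * 9 + [("P", "b")])
    personal = fine_tune(base, [("P", "b")] * 10)
    print(probabilities(base, "P"), probabilities(personal, "P"))
```

Copying the base before training leaves it available to be fine-tuned again for other players.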

The personalized models are so accurate that, given just a few games, we can tell which player played them! This is a stylometry task, whose goal is to recognize an individual’s playing style. We trained personalized models for 400 players of varying skill levels and had each model predict the moves in four games played by each player. In 96% of the four-game sets we tested, the model with the highest accuracy (i.e., the one that most often predicted the moves actually played) was the personalized model trained on the player who played those games. With data from just four games, we can identify who played them from among 400 players. The personalized models capture individual chess-playing styles with high accuracy.
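The identification step amounts to an argmax over candidate models: score every personalized model on the same pooled positions and pick the best. This minimal sketch assumes each model is exposed as a function from a position to a single predicted move. The interface and names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]  # position -> predicted move (hypothetical interface)


def identify_player(models: Dict[str, Model], games: List[Tuple[str, str]]) -> str:
    """Return the player whose personalized model best predicts the moves in `games`.

    `games` holds (position, move actually played) pairs pooled from a small set
    of games, e.g. four games by one unknown player.
    """
    def accuracy(model: Model) -> float:
        return sum(model(pos) == move for pos, move in games) / len(games)

    return max(models, key=lambda name: accuracy(models[name]))
```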

Using AI to improve human chess play

We designed Maia as a chess engine that predicts human moves at specific skill levels, but it has evolved into a personalized engine that can identify games played by individual players. This achievement is an exciting step toward understanding human chess play and brings us closer to our goal of creating AI chess-teaching tools that help humans improve. Two of the many abilities of a good chess teacher are understanding how students at different skill levels play and recognizing each student’s individual style. Maia shows that AI can realize these capabilities.

The ability to create personalized chess engines from publicly available individual player data raises interesting questions about the possible uses (and misuses) of this technology. We begin this discussion in our paper, but there is a long way to go before we understand the full potential and implications of this line of research. Chess will, as it always has, serve as a model system for building AI systems and for the debates that surround them.

Beyond chess, AI like Maia, which predicts human behavior in a given domain with high accuracy, could be applied in domains such as (1) high-stakes settings, (2) online environments, and (3) interactions with the real world. An example application in domain (2) is an “AI that plays video games like a human player,” and an example in domain (3) is an “AI that controls a robot that behaves like a human.”

Acknowledgments

We would like to thank Lichess.org for providing the human games we used for training and for hosting our Maia models so that people can play against them. Associate Professor Ashton Anderson is supported in part by an NSERC grant, a Microsoft Research gift, and a CFI grant. Professor Jon Kleinberg is supported in part by a Simons Investigator Award, a Vannevar Bush Faculty Fellowship, a MURI grant, and a MacArthur Foundation grant.