Table of contents

- Introduction

- What is DALL·E and what can it do?

- How was DALL·E made?

- How “smart” is DALL·E?

- Zero-shot visual reasoning

- What does DALL·E stand for?

Introduction

Every few months, someone puts out a machine learning paper or demo that makes my mouth water. This month, I was blown away by OpenAI’s new image generation model, DALL·E.

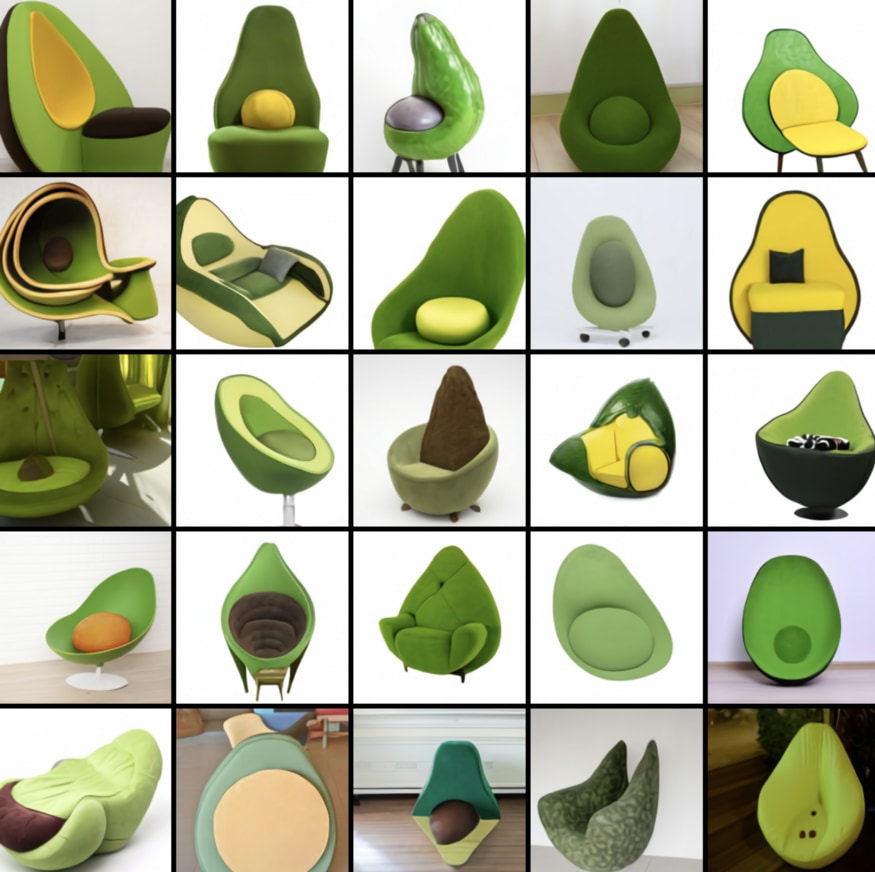

This giant 12-billion-parameter neural network receives text captions (like “avocado-shaped armchair”) and generates images to match them.

These generated photos are pretty evocative (makes me want to buy one of those avocado chairs). But even more impressive is DALL·E’s ability to understand and express concepts of space, time, and logic (more on this later).

This article provides a quick overview of what DALL·E can do, how it works, how it fits into the latest trends in machine learning models, and why it matters. Let’s go.

What is DALL·E and what can it do?

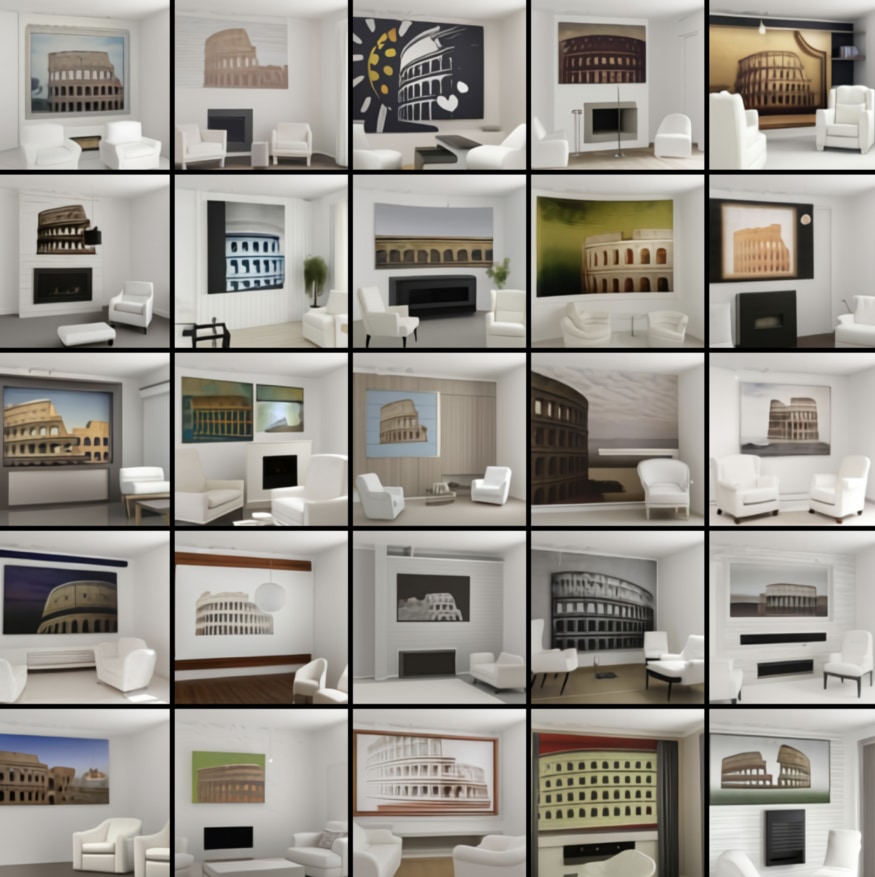

Last July, OpenAI, the creator of DALL·E, announced a similarly gigantic model called GPT-3, surprising the world with its capabilities. GPT-3 could generate human-like text, including op-eds, poems, sonnets, and computer code. DALL·E is a natural extension of GPT-3: it parses text prompts and responds with pictures rather than words. For example, one sample from OpenAI’s blog renders an image like the one shown here from the text prompt “living room with two white armchairs and a picture of the Coliseum”.

The image above isn’t bad, is it? You can see how this kind of output could help designers. Note that DALL·E can generate a large set of images from a single prompt. The images are then ranked by CLIP, a second model that OpenAI released at the same time as DALL·E, which tries to determine which images best match the prompt.
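The ranking step described above can be sketched as follows. This is only an illustration: it assumes the ranking model turns the caption and each candidate image into vectors and scores them by cosine similarity. The function names, the three-dimensional vectors, and the image names are all hypothetical and are not taken from OpenAI's code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_images(text_embedding, image_embeddings):
    """Return image names sorted from best to worst match for the caption."""
    scored = [
        (cosine_similarity(text_embedding, embedding), name)
        for name, embedding in image_embeddings.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored]


# Hypothetical embeddings; a real model would produce much larger vectors.
caption = [1.0, 0.0, 1.0]
candidates = {
    "image_a": [0.9, 0.1, 0.8],
    "image_b": [0.0, 1.0, 0.0],
    "image_c": [0.5, 0.5, 0.5],
}
ranking = rank_images(caption, candidates)
print(ranking)  # ['image_a', 'image_c', 'image_b']
```

In this toy setup, only the top few entries of `ranking` would be shown to the user.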

How was DALL·E made?

Unfortunately, OpenAI has not published a paper yet, so we don’t know the details. But at the core of DALL·E is the same new neural network architecture that powers recent advances in machine learning: the Transformer, introduced in 2017. This type of network is easy to parallelize and can be scaled up and trained on large datasets. Transformers have been especially revolutionary in natural language processing (they are the basis for models such as BERT, T5, and GPT-3), have improved the quality of Google search results (*5), and have achieved groundbreaking results in translation and even in protein structure prediction (*6).

Most of these large language models are trained on large text datasets (made from things like Wikipedia and web crawls). What makes DALL·E unique is that it is trained on sequences of word-pixel combinations. We don’t yet know what that dataset was (it presumably contained images and their captions), but it was undoubtedly huge.
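To make the idea of a "word-pixel sequence" concrete, here is a toy sketch. Since the training details were not public at the time of writing, everything here is an assumption: the word-level vocabulary, the use of raw pixel values as tokens, and the function name `build_sequence` are hypothetical and only illustrate how text and image data could share one sequence.

```python
def build_sequence(caption, pixels, vocab):
    """Concatenate caption tokens and pixel tokens into one list of ids."""
    text_ids = [vocab[word] for word in caption.lower().split()]
    # Shift pixel values past the text vocabulary so the ids never collide.
    offset = len(vocab)
    pixel_ids = [offset + value for row in pixels for value in row]
    return text_ids + pixel_ids


# Hypothetical two-word vocabulary and a 2x2 grayscale "image".
vocab = {"avocado": 0, "armchair": 1}
pixels = [[0, 255], [128, 64]]

sequence = build_sequence("avocado armchair", pixels, vocab)
print(sequence)  # [0, 1, 2, 257, 130, 66]
```

A sequence model trained on many such lists could, in principle, learn to continue the text portion with plausible image tokens.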

(*Translation Note 5) On October 25, 2019, a post on Google’s official US blog announced that BERT had been introduced to Google Search. BERT (Bidirectional Encoder Representations from Transformers) is a natural language processing model that applies Transformers bidirectionally. With the introduction of this model, it became possible to search using longer, sentence-like queries. For a technical explanation of BERT and its impact on natural language processing research, see the translated articles below.

AINOW translated article “BERT Explanation: State-of-the-art language model for natural language processing”

AINOW translated article “2019 was the year of BERT and Transformer”

(*Translation Note 6) On November 30, 2020, the Google-owned AI research institute DeepMind published a blog post about AlphaFold 2, a model that predicts protein structure. Protein structure prediction is known as one of the most difficult problems in biology, and since 1994 a competition devoted to it, CASP (Critical Assessment of protein Structure Prediction), has been held every other year. At CASP14 in 2020, AlphaFold 2 won with a score of over 90 out of 100 and was praised for making great progress on a 50-year-old puzzle. The author of this article, Markowitz, points out that the model uses Transformers. For the original version of AlphaFold, see the translated article below.

AINOW translated article “AlphaFold: Harnessing AI for Scientific Discovery”

How “smart” is DALL·E?

While the DALL·E results quoted above are impressive, questions always arise when models are trained on large datasets. It is quite natural for a skeptical machine learning engineer to ask whether the results are high quality simply because the model copied or memorized images from its source material.

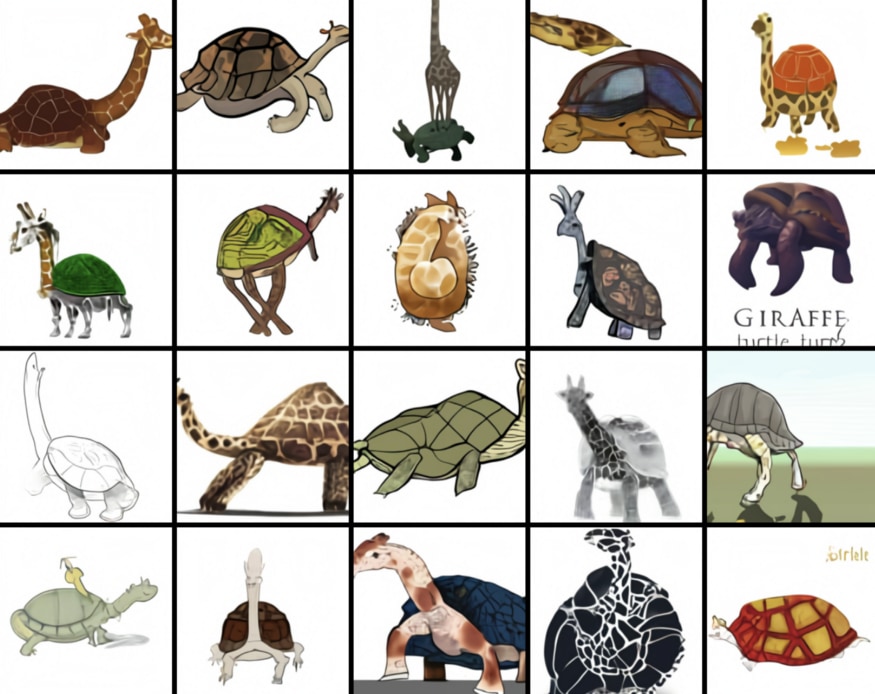

To prove that DALL·E is more than just an image regurgitator, the authors of the OpenAI blog post forced it to render images from some rather unusual text prompts. Here are a few examples:

“Professional high-quality illustration depicting a chimera made from a giraffe and a turtle”

“A snail made of a harp”

The results are all the more impressive because it’s unlikely that the model encountered many giraffe-turtle hybrids in the training dataset.

Additionally, the strange text prompts in the blog post hint at something even more fascinating about the model: its ability to perform “zero-shot visual reasoning.”

Zero-shot visual reasoning

In machine learning, you typically train a model by giving it thousands or millions of examples of the task you want it to perform, in the hope that it picks up the patterns needed to perform that task.

For example, to train a model to identify dog breeds, a neural network can be shown thousands of photos of dogs labeled by breed and then tested on its ability to tag new dog photos. This is a task of limited scope, and it even feels archaic compared to OpenAI’s latest achievements.
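The labeled-example workflow described above can be sketched in a few lines. To keep the sketch short, it swaps the neural network for a much simpler nearest-centroid classifier, and the two numeric features per dog (height and weight) and the sample values are hypothetical. The point is only the shape of supervised learning: fit on labeled examples, then tag an unseen one.

```python
def train(examples):
    """Average the feature vectors of each label to get one centroid per breed."""
    sums, counts = {}, {}
    for features, label in examples:
        totals = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            totals[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: [total / counts[label] for total in totals]
        for label, totals in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled data: (height in cm, weight in kg) -> breed.
training_examples = [
    ((25.0, 3.0), "chihuahua"),
    ((23.0, 2.5), "chihuahua"),
    ((60.0, 30.0), "labrador"),
    ((58.0, 32.0), "labrador"),
]

centroids = train(training_examples)
prediction = predict(centroids, (57.0, 29.0))
print(prediction)  # labrador
```

A model like this can only ever answer with one of the breeds it was shown, which is the limitation that zero-shot behavior goes beyond.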

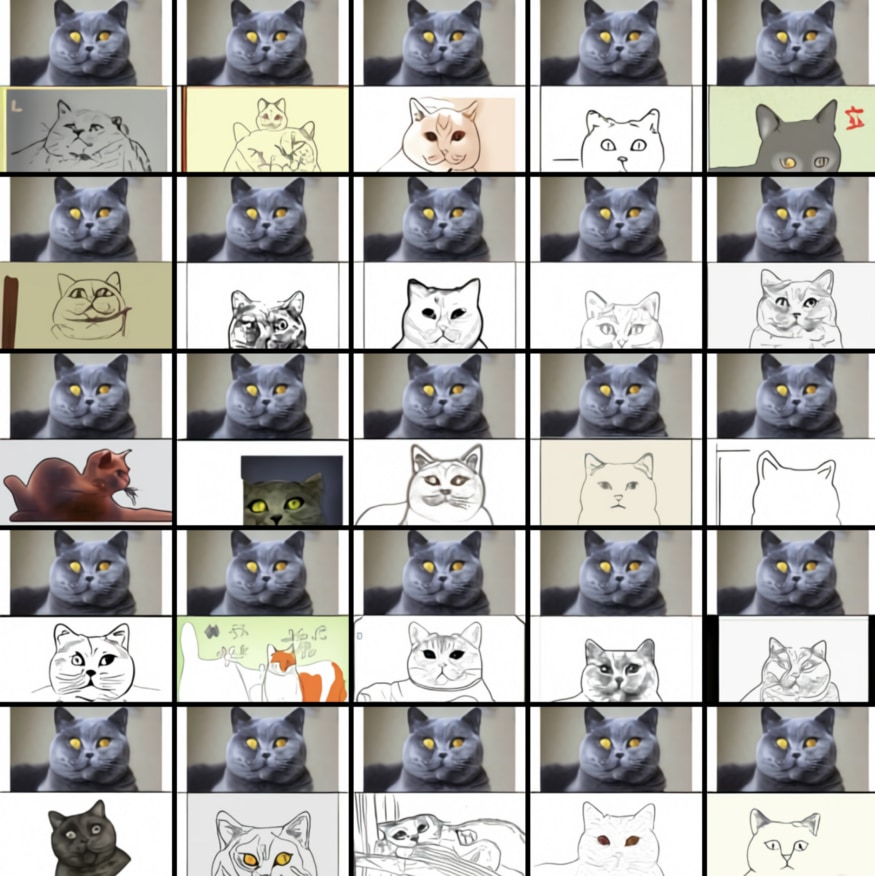

Zero-shot learning, on the other hand, is the ability of a model to perform a task for which it has not been specially trained. For example, DALL·E was trained to generate images from captions. But with the right text prompt, it can also convert images to sketches. The image below is such an example.

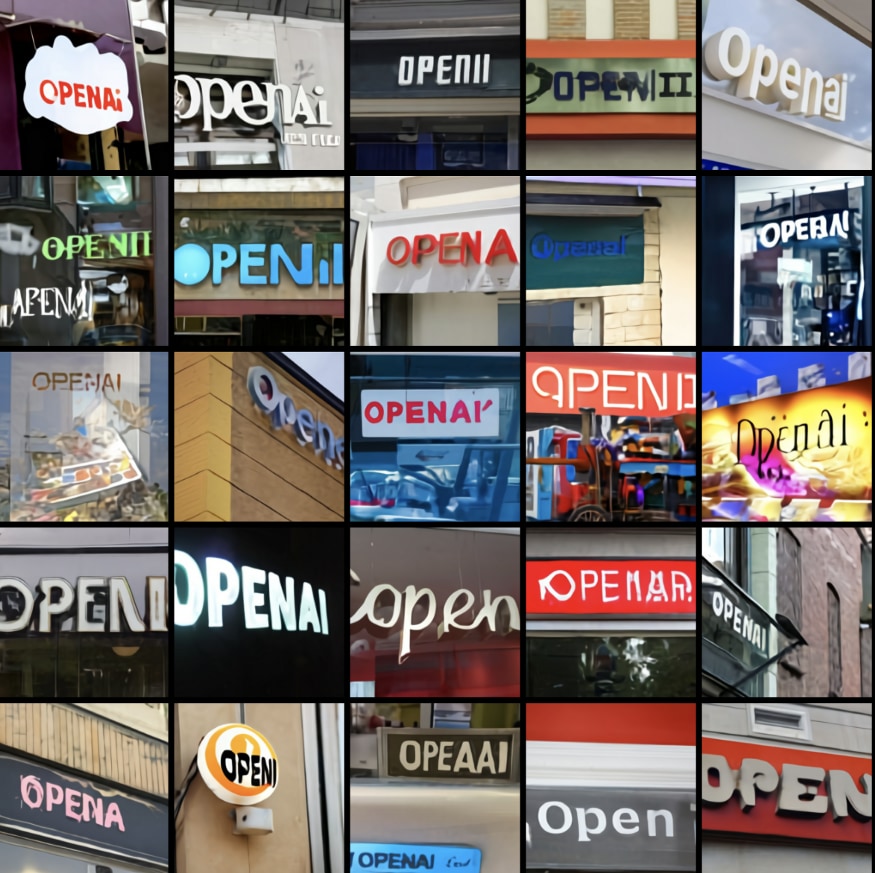

In addition, DALL·E can display custom text on road signs. See image below.

This way DALL·E can work much like Photoshop’s filters, even though it wasn’t specifically designed to work that way.

The model even shows an “understanding” of visual concepts (such as “macroscopic” or “cross-section” pictures), places (such as “pictures of Chinese food”), and time (such as “pictures of mobile phones from the 20’s”). For example, here’s what it spat out in response to the prompt “Pictures of Chinese food”:

In other words, DALL·E can not only paint pretty pictures for captions but also, in a sense, answer questions visually.

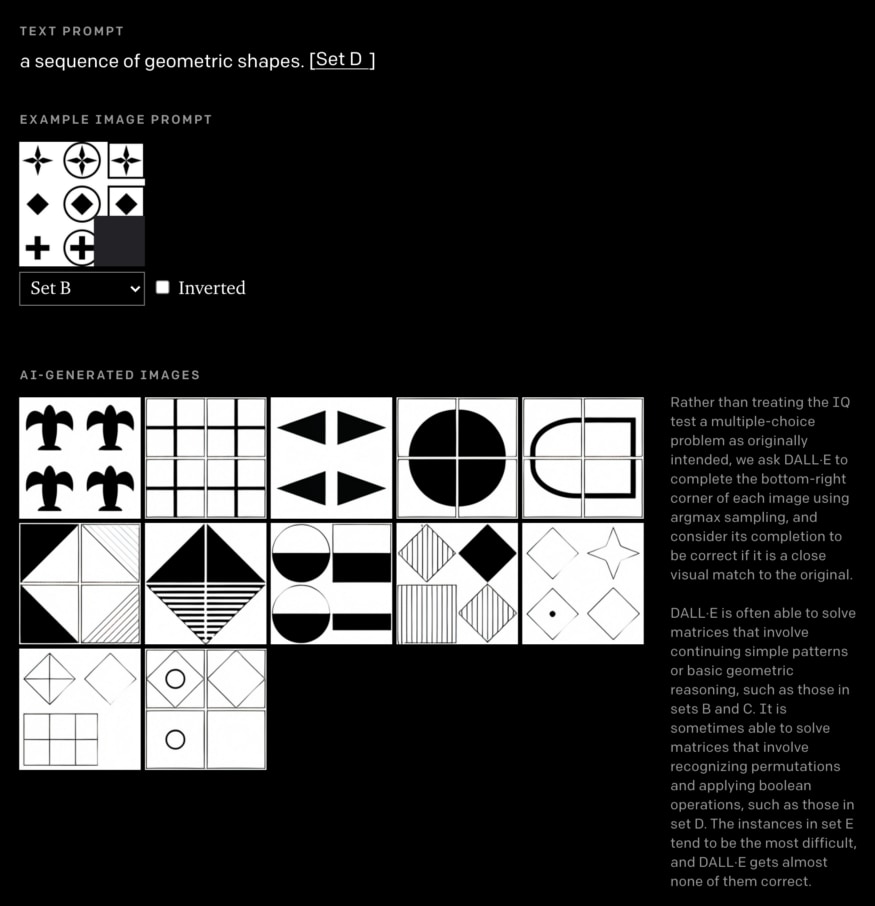

To test DALL·E’s visual reasoning ability, the authors of the blog post gave DALL·E a visual IQ test. In the example below, the model had to complete the bottom right corner of the grid, following a visual pattern hidden in the test.

“DALL·E can often solve matrices containing simple patterns and basic geometric reasoning,” the authors wrote, noting that it performed better on some problems than on others. DALL·E’s performance also dropped when the colors of the visual puzzle were inverted, “suggesting that it may also be vulnerable in unexpected ways,” the authors wrote.

What does DALL·E stand for?

The most impressive thing about DALL·E is that it performs surprisingly well on a variety of tasks that its authors never expected.

“DALL·E […] has been found to be able to perform several types of image-to-image translation tasks when prompted with text in an appropriate manner. We didn’t expect this ability to emerge, and we didn’t make any changes to our neural networks or training procedures to encourage it.”

These results were totally unexpected. DALL·E and GPT-3 are two examples of a larger theme in deep learning: extraordinarily large neural networks trained on unlabeled internet data (an example of “self-supervised learning”) are highly versatile and can do many things they were not specifically designed for.

Of course, DALL·E should not be mistaken for general intelligence. It’s not hard to fool this kind of model. We’ll know more when this model becomes openly accessible and we can play around with it. That said, I can’t help but be excited while we wait for the public release.