Table of Contents

- 1 Generative Deep Learning

- 1.1 Autoencoder

- 1.2 GANs

- 1.3 Encoder-Decoder Models

- 1.4 High-definition facial image generation

- 1.5 Image style conversion

- 1.6 Super Resolution

- 1.7 Speech synthesis

- 2 Deepfake creation process

- 2.1 Generation of facial images

- 2.2 Training of face generation model

- 2.3 Speech Synthesis

1 Generative Deep Learning

There are several technologies that have greatly contributed to the evolution of deep learning technology, but one of the most important is the technical area of generative models. In terms of practical applications of machine learning, discriminative models are widely used to classify data. It is characterized by being able to generate [1].

Among generative models, those that use neural network technology such as deep learning are called deep generative models [2][3], and (variational) autoencoders and GANs are typical examples. Also, as a technique suitable for generating series data such as voice and text, there is a technique called an encoder/decoder model. In this white paper, we collectively refer to the large technological area that includes the three technologies that can generate these data as “ generative deep learning ”.

These techniques are very versatile and are capable of more than just image generation. Technology such as Style Transfer , which converts a live-action photograph into a painting-like image, SuperResolution, which converts a low -resolution image into a high-resolution image, and technology for generating text and voice. are also being developed. In this section, we will explain these technologies while looking back on the history of technological evolution.

1.1 Autoencoder

An autoencoder is a type of neural network model that is trained to restore (copy) the input data [4]. At first glance, the operation of outputting what is input seems meaningless, but the point is to once map the data into a highly compressed information space called latent space, and then restore it.

In this way, by converting data into highly compressed small pieces of information, data with a large amount of information (images, etc.) can be expressed with a smaller amount of information. etc.). In 1987, the idea of a neural network for reconstructing input was proposed [5]. In 2007, a research group at the University of Montreal led by Bengio et al. [6], it has drawn attention again in the context of deep learning.

In 2013, Kingma announced the Variational Auto-Encoder (VAE), which became widely recognized as the leading approach to deep generative modeling. Variational autoencoder technology is now the basis for many subsequent autoencoder approaches.

Autoencoders are used today for many purposes, not only for extracting important features from data, but also for anomaly detection to determine whether given data is normal or abnormal, It is used for a wide range of purposes, such as a recommendation system that suggests products that are likely to be purchased[7].

1.2 GANs

Generative adversarial network (GAN) is one of the representative techniques of generative deep learning devised by Ian Goodfellow et al.[8]. A GAN consists of two neural networks, a generator and a discriminator . The generator is responsible for the function of generating an image, and the discriminator is responsible for identifying whether the image is generated or genuine.

The generator is trained to generate more realistic images so that the classifier cannot detect them, and the classifier is trained to more accurately discriminate between genuine and fake images. be done. As these two neural network models continue to compete and improve, they encourage each other to evolve, ultimately giving the generator the ability to generate more realistic data.

The generator receives as input data random data created in a space in which information is highly compressed, called a latent space , and converts it into an image. Initially, the images generated by the generator are completely random images, but as the training of the model progresses, meaningful images are gradually output. You will be able to output an image close to

At this time, the first output from the generator is a meaningless random image, so it is relatively easy for the classifier to distinguish between the real image and the generated image. Since the images to be output are more sophisticated, it is necessary to find a more sophisticated discrimination mechanism (feature).

On the other hand, since the discrimination performance of the classifier is low at first, it is relatively easy for the generator to generate an image that cannot be detected as a fake. It is necessary to create a more advanced mechanism for generating images.

In this way, in GAN, two neural network models continue to compete with each other to improve their performance, and eventually it becomes possible to generate data that is close to the real thing.

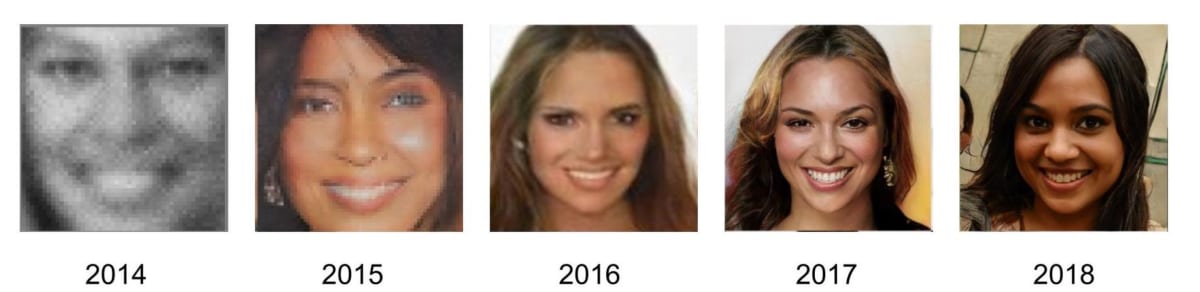

At the heart of the GAN idea is a unique, yet simple and powerful model training mechanism. Because of the simplicity of its mechanism, researchers and engineers around the world have accelerated its efforts to make various improvements, and as a result, today, a wide variety of models have been developed and released to the public. According to the site “The GAN Zoo” [9], which collects variants of GANs, there are more than 500 variations of GANs (updated in 2018). The performance evolution of facial image generation technology using GAN from 2014 to 2018 is shown below. [3].

1.3 Encoder-Decoder Models

As a concept similar to the autoencoder, there is the concept of Encoder-Decoder Models . An encoder-decoder model is a model structure of a neural network, suitable for transforming one series of data into another series of data. It has been developed mainly in areas such as natural language processing and speech processing.

A specific example of the application is machine translation (automatic translation). Machine translation is a general term for techniques for converting sentences written in one language into another sentence, and treats sentences as sequences of characters and words.

In machine translation using the encoder/decoder model, a neural network called an encoder analyzes the sequence of words in the conversion source sentence, and a neural network called a decoder generates the conversion destination sentence. there is

However, although the concepts and terms of encoder and decoder are used in the same way as autoencoders, neural networks with a structure suitable for handling series data are often used, and the internal structure is significantly different.

1.4 High-definition facial image generation

Image generation is one of GAN’s strengths, but facial image generation is rapidly gaining momentum because it is easy to obtain a large amount of homogeneous and high-quality data, and the problem setting is moderately limited. It is a field that has evolved into

One of the research groups that has led the development of technology to generate high-definition facial images is semiconductor manufacturer NVIDIA . Here, we introduce two models developed by NVIDIA.

First, ProgressiveGAN [12] is an epoch-making method that overcomes the weakness of conventional GANs that cannot generate high-resolution images . Conventional GANs have the problem of inconsistent performance, in addition to requiring enormous time and resources to train models that generate high-resolution images.

On the other hand, it was found that a model that generates low-resolution images can be trained stably in a short time. Therefore, in Progressive GAN, we start with a low-resolution model, gradually increase the size of the model, and train it to output high-resolution images. It is now possible to generate high resolution images.

A year later, NVIDIA announced StyleGAN[13], a GAN developed from Progressive GAN.

By introducing two networks, the Mapping Network and the Synthesis Network, StyleGAN extracts features that affect the image due to attributes such as age and gender, and analyzes important styles and noises that are not. It is now possible to train data while sorting out

As a result, it has become possible to generate images of higher quality than conventional GANs, and it has become possible to generate high-definition facial images as shown at the beginning of this white paper.

1.5 Image style conversion

In early GANs, data was generated randomly, so it was not possible to control what data was output. Conditional GAN (hereinafter referred to as CGAN) [14], announced in 2014, is a technology that can generate data while controlling attributes by giving attribute information in addition to images during training. As a result, for example, it is possible to generate a face image by designating attributes such as age and face shape.

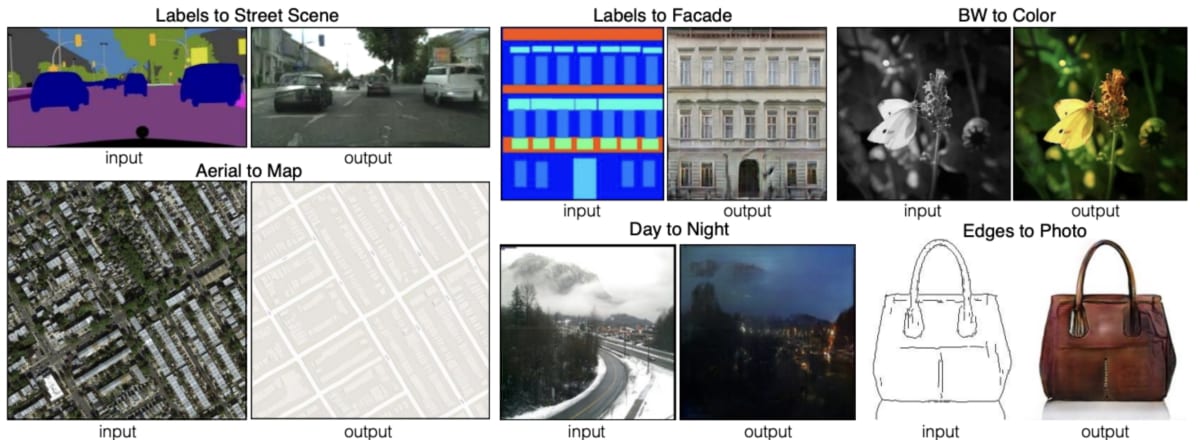

Pix2Pix[15], which was announced later, is a technology that realizes ” style conversion ” that converts one type of image to another by using CGAN internally . By using Pix2Pix, for example, it is possible to convert line drawings into actual images and vice versa.

Pix2Pix was effective when two styles of images were available in pairs, but in reality it is generally difficult to obtain datasets containing a large number of such paired images. However, there is a problem that the application is limited.

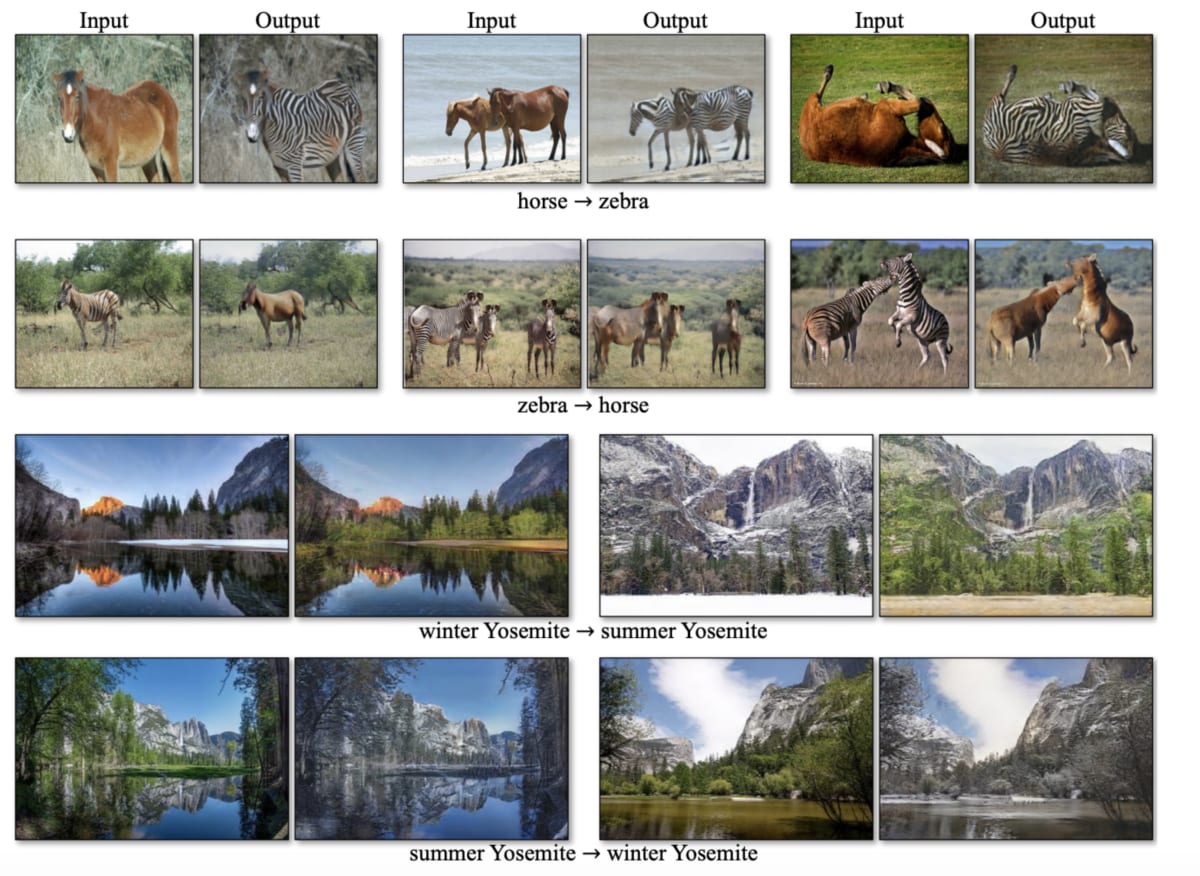

CycleGAN[16] solves this problem and enables style conversion even for data that does not have paired images. In CycleGAN, in order to train the relationship between images, in addition to having two pairs of generators and classifiers trained on different style datasets, the transform → inverse transform is performed as a series of processes to restore the original image. Train the model to do so.

This made it possible to convert between two styles without having a common pair of images for the two styles.

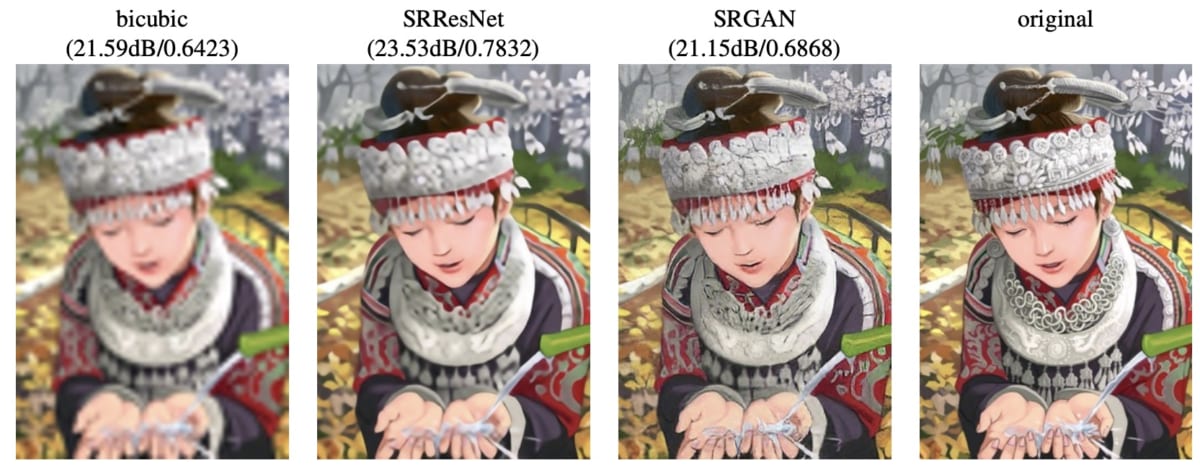

1.6 Super Resolution

As a technique for converting a low-resolution image into a high-definition image, there is a technique called ” Super Resolution “.

SRGAN[17] is a pioneering study using GANs for super-resolution. Train a model using data pairs of high and low resolution images. First, the generator attempts to generate high definition images from low resolution images.

Next, the high-resolution image prepared in advance and the image generated by the generator are passed to the classifier, and it is determined whether the generated image is genuine or not. The generator is trained to output images that are closer to the real high-definition image, and the discriminator is trained adversarially to determine whether the generated image is genuine.

By repeating this training process, eventually the generator will be able to generate high resolution images from low resolution images. As shown in the figure below, SRGAN can produce high-quality super-resolution images that are almost indistinguishable from the original high-definition images.

After the advent of SRGAN, higher-performance super-resolution techniques such as RankSRGAN[18] and PULSE[19] have emerged, and some of these techniques have been incorporated into deepfake implementations.

1.7 Speech synthesis

One of the representative studies on speech synthesis is WaveNet[20]. WaveNet is an encoder/decoder model announced by DeepMind in 2016 that can generate raw speech waveforms.

WaveNet was able to express the intonation of long sentences by adopting an architecture that improved the convolutional neural network (CNN), which was demonstrating high performance in the field of image processing at the time. In addition to being able to synthesize natural voices, it is now possible to switch between multiple speakers and control tones and emotions.

Subsequent improved versions have been put to practical use in various places, such as the Google Assistant and Google Cloud’s multilingual speech synthesis service.

Tacotron[21][22] developed this technology. The above WaveNet combines several components such as 1) text analysis, acoustic model, and speech synthesis to output the final synthesized speech. The Tacotron is composed of these, and its performance has improved dramatically.

In this way, from input to output, many processes are performed in one model, so it is called end-to-end speech synthesis technology.

As an advanced form of speech synthesis technology, a technology has been developed in which the voice of a specific speaker is simultaneously received as an input, synthesized and output as if the person were speaking. This is voice cloning, a technique often used in deepfakes.

“SV2TTS”[23] is one of the representative examples of such voice cloning technology, and is a technology developed from WaveNet and Tacotron. It has become.

2 Deepfake creation process

2.1 Generation of facial images

The creation of fake videos was technically possible before the spread of deep learning technology and GAN technology, but it was generally a difficult task that required specialized skills and a lot of labor.

In particular, compositing each frame in a natural way was a difficult task that required a lot of patience. Another technical issue was that unnatural parts would inevitably remain because humans manually processed each frame.

However, by using deepfake technology, it has become possible to easily create high-quality, natural videos in a short time if you have data and a certain amount of PC.

Famous deepfake implementations include DeepFaceLab[24][25], FaceSwap[26], faceswap-GAN[27] (development stopped in 2019). The face image processing process in .

- face image extraction

- Model training for facial image conversion

- Face image conversion processing

- post-processing

First, a series of processing starts from identifying the location and orientation of the face and extracting the face image (step 1). Here, we mainly use deep learning technology as a model for image recognition to detect the face and each part from the image, acquire landmarks such as the eyes and nose from the face, and determine the direction and size of the face. identify the In this process, a technique called segmentation is used to identify the face part pixel by pixel and extract the face image [24].

Next is model training (step 2) for facial image conversion. Using the face images of the two people to be transformed as a dataset, an autoencoder is used to train a model (step 2) that generates (reconstructs) each face image of the two people [24].

At this time, if a large amount of face data to be replaced can be used for training, the performance will be improved and more natural moving images can be created. For this part, DeepFaceLab and FaceSwap mainly use autoencoders, and faceswap-GAN mainly uses GANs.

Then, in the face image conversion process (step 3), a target face is generated for the replacement target location using the face generation model used in learning. Finally, as post-processing (step 4), there is the process of blending and sharpening [24].

In blending, more natural output can be obtained by processing such as changing the size of the replaced face, adjusting the seams of the background, blurring the outline of the face, and smoothing the seams of the replaced face. .

Then, in sharpening, the output is converted into a high-definition image using techniques such as super-resolution.

2.2 Training of face generation model

Among the series of deepfake processes, step 2 “model training for face image conversion” and step 3 “face image conversion processing” are the main processes, so they will be explained in more detail.

There are several types of implementations of this part, such as those that use GANs, but here we use an autoencoder as a relatively common method that is used in some famous deepfake implementations. How to use it will be explained as an example.

First, assuming that A is the person to be converted and B is the person to be converted, one common encoder and two different decoders corresponding to each person are prepared. Next, in order to generate face images of persons A and B separately, the decoders are divided into two, but one encoder is shared.

As mentioned above, an autoencoder is a model that has the ability to extract the important features of the input information (image), express it with a small amount of information, and finally restore it again.

By supplying a large number of person’s face images to the encoder/decoder, a decoder that can generate each person’s face image can be realized. At this time, the encoder extracts important attributes (face orientation, etc.) as features for expressing the face image with a small amount of information. A corresponding face image can be generated.

Based on the features extracted from the conversion source image of person A, a corresponding conversion target image of person B is generated, and the conversion process is realized by embedding the face in the corresponding part.

By extracting the important features of the input information (image), it is a model that has the ability to express with a small amount of information and finally restore it again. By supplying a large number of person’s face images to the encoder/decoder, a decoder that can generate each person’s face image can be realized.

At this time, the encoder extracts important attributes (face orientation, etc.) as features for expressing the face image with a small amount of information. A corresponding face image can be generated.

Based on the features extracted from the conversion source image of person A, a corresponding conversion target image of person B is generated, and the conversion process is realized by embedding the face in the corresponding part.

The network structure that has two decoders and a common encoder, which is used in the deepfake implementation, is a technology that was originally developed mainly in the field of machine translation around 2017-2018. By using one common encoder for two decoders, the encoder can obtain a language-independent universal representation [28].

Later, such techniques were adopted in deepfake implementations to achieve natural transformations.

2.3 Speech Synthesis

In deepfake, voice clone technology such as SV2TTS mentioned above is used to synthesize voice of arbitrary content with a specified voice (voice of a specific person). This section uses SV2TTS as an example to explain the process flow of speech synthesis.

SV2TTS is a model that is roughly divided into three neural networks. First, we construct a “ SpeakerEncoder ”, which is a neural network that learns the characteristics of speaking styles from a large amount of speech data of speakers .

A large and diverse speaker dataset is required for high performance. The network takes as input the speech information of the person to be cloned, and outputs vector information that characterizes the person’s speaking style.

The next neural network, “ Synthesizer ,” receives the feature information of the speaker and what he wants to say as text information. spectrogram).

For this part, it is possible to use Tacotron2 [22], which is a kind of encoder/decoder model, and train the model by pairing the text information and the target sound source.

Finally, the neural network ” Vocoder ” receives the output speech information, synthesizes the voice waveform, and outputs it. WaveNet[20] and WaveGlow[29] can be used for this part, and by using these well-developed models, it is possible to output natural speech.