Table of Contents

- How AutoML Evolves the Applied Machine Learning Landscape

- What Do Data Scientists Do?

- What Does Automated Machine Learning (AutoML) Do?

- Who Is AutoML Really Good For?

- For the future of collaboration

How AutoML Evolves the Applied Machine Learning Landscape

Readers of this article probably already know what AutoML, or automated machine learning, is: tooling that automates machine learning pipelines end to end. Google offers it as a cloud product (Cloud AutoML), and Microsoft and Amazon have their own cloud implementations.

Automated machine learning is designed to search broadly and deeply across a vast space of models and hyperparameters to find the best model and feature extraction for a problem. It not only automates the majority of the work in current machine learning projects, it also makes it relatively easy to get started. Depending on your organization's technical infrastructure, someone with software or cloud experience can use AutoML to run their own models at scale quite easily.

Given the current state of AutoML described above and the low-code/no-code tools sweeping the industry, I see many articles claiming that AutoML will replace data scientists. I don't agree. In this article, I lay out what I think will happen as AutoML is adopted further.

What Do Data Scientists Do?

While there is considerable variation across companies and domains, data scientists typically work with business leaders, experimenting with and building machine learning models that provide statistical insights and guide high-value business decisions. The role resembles that of a high-level technical consultant, both internally and externally.

The life cycle of these projects begins with data engineers gathering data from myriad systems, validating it, and storing it in appropriate formats and systems such as databases, data lakes, and data warehouses. When a business need arises, the data scientist is responsible for understanding the problem at hand and what data could address it. This requires back-and-forth with both data engineers and business leaders to settle on appropriate data sources, quality limits, and so on.

Data scientists then typically build proof-of-concept (PoC) models that emphasize experimentation over scalability. This PoC stage is highly iterative, ad hoc, and exploratory, and collaboration with data analysts becomes closer. The goal of this stage is to identify the initial dominant features, data dependencies, a working model, optimal hyperparameters, the limits of the model and project, and so on. A data scientist must have a repertoire of many statistical and algorithmic techniques to tackle the problem at hand. Once we are confident enough in the PoC, we refactor the code written so far to optimize performance and move the model into production.

During deployment, we work with machine learning engineers to get the model into production and set up appropriate model monitoring. Post-deployment work is typically a collaboration between data scientists and machine learning engineers. Finally, the data scientist gives a presentation that briefly and clearly describes the model, its results, and how they relate to the business.

Each of the tasks described above has its own depth and challenges, but operating and managing the entire life cycle is what applied machine learning is. Data projects are also typically iterative rather than linear: each stage often turns out to require revisiting the previous one, so it helps to have every role involved from the beginning. Finally, depending on the size of your organization and the resources available, it is common for one person to fill multiple roles. Here are the most common role combinations I have seen (as part of a data scientist role):

- Data Scientist/Machine Learning Engineer: Usually stronger at building scalable ML systems that extend far beyond Jupyter notebooks. This is essentially what most companies mean when they look for "data scientists."

- Data Scientist/Data Analyst: Usually good at speed and experimentation. Most often works as a lead analyst or product data scientist.

- Data Scientist/Data Analyst/Machine Learning Engineer: Usually found at the senior, lead, and principal levels. A master of multiple trades.

What Does Automated Machine Learning (AutoML) Do?

AutoML automates the entire machine learning workflow.

AutoML is best run within cloud infrastructure such as Google Cloud Platform, Azure Machine Learning, and Amazon SageMaker. It aims to replace the manual parts of model tuning and experimentation, which is exactly what today's data scientists do when searching for the best model and hyperparameters for the task they are modeling. Because AutoML's main function is to optimize a metric and iterate until it reaches the best result (or meets your exit criteria), it also takes over the iterative work.
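To make the search loop concrete, here is a minimal sketch of the idea: sample a model family and hyperparameters, score each candidate, keep the best, and stop when a target score (the exit criterion) or a trial budget is reached. This is plain random search under stated assumptions, not how any particular cloud AutoML service works; `SEARCH_SPACE`, `toy_evaluate`, and the scores it returns are hypothetical stand-ins for real training and validation.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical search space: model family -> candidate values per hyperparameter.
SEARCH_SPACE: Dict[str, Dict[str, List[float]]] = {
    "ridge": {"alpha": [0.01, 0.1, 1.0, 10.0]},
    "knn": {"k": [1, 3, 5, 9, 15]},
}


def sample_config(rng: random.Random) -> Dict:
    """Randomly pick a model family, then one value for each of its hyperparameters."""
    family = rng.choice(sorted(SEARCH_SPACE))
    params = {name: rng.choice(values) for name, values in SEARCH_SPACE[family].items()}
    return {"family": family, **params}


def auto_search(
    evaluate: Callable[[Dict], float], budget: int, target: float, seed: int = 0
) -> Tuple[Optional[Dict], float, int]:
    """Random search returning (best_config, best_score, trials_used).

    Stops early once best_score >= target (the exit criterion),
    otherwise after `budget` trials.
    """
    rng = random.Random(seed)
    best_config: Optional[Dict] = None
    best_score = float("-inf")
    for trial in range(1, budget + 1):
        config = sample_config(rng)
        score = evaluate(config)  # in practice: train, then score on validation data
        if score > best_score:
            best_config, best_score = config, score
        if best_score >= target:
            return best_config, best_score, trial
    return best_config, best_score, budget


def toy_evaluate(config: Dict) -> float:
    """Hypothetical validation score standing in for real model training."""
    if config["family"] == "ridge":
        return 0.80 - abs(config["alpha"] - 1.0) * 0.01
    return 0.85 - abs(config["k"] - 5) * 0.01


if __name__ == "__main__":
    best, score, trials = auto_search(toy_evaluate, budget=200, target=0.85)
    print(best, round(score, 3), trials)
```

Real systems typically replace random sampling with smarter strategies (for example, Bayesian optimization) and add feature engineering and ensembling, but the metric-driven loop with an exit criterion is the same basic shape.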

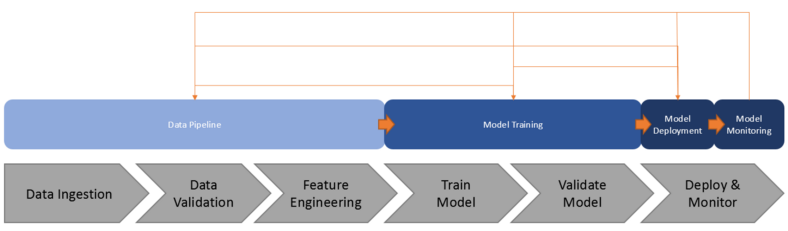

Image Source: Author


Once a model is trained, it can easily be deployed to a production environment in the cloud, where model monitoring, such as precision-recall curves and feature importance, can be set up.
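Feature importance can be computed in several ways. One common, model-agnostic approach is permutation importance: shuffle one feature's column and measure how much the evaluation metric drops. The sketch below assumes a trained model exposed as a plain `predict(row)` function and uses accuracy as the metric; the model (`toy_predict`) and data are hypothetical, and this is not a claim about how any cloud platform computes its feature-importance reports.

```python
import random
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[List[float]], int],
    X: List[List[float]],
    y: List[int],
    n_repeats: int = 10,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(y, [predict(r) for r in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that only looks at feature 0.
def toy_predict(row: List[float]) -> int:
    return 1 if row[0] > 0.5 else 0


X = [[i / 10, (i * 7 % 10) / 10] for i in range(10)]
y = [toy_predict(row) for row in X]

if __name__ == "__main__":
    print(permutation_importance(toy_predict, X, y))
```

Feature 1 gets an importance of exactly 0 because the model never reads it, while feature 0 gets a positive score.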

The underlying algorithms AutoML uses are largely ones that data scientists and machine learning engineers already know and use. However, there is typically little transparency about how these cloud offerings actually perform AutoML. That said, they often use state-of-the-art and ensemble models, which frequently perform as well as hand-crafted models.

Built-in data visualization and feature extraction are also very useful: as it runs, the system can identify important feature crosses, appropriate transformations, and key visualizations.
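As a rough illustration of what identifying important feature crosses can mean, the sketch below builds every pairwise product of numeric columns and ranks the products by absolute Pearson correlation with the target. This is a simple screening heuristic chosen for illustration, not the method any particular AutoML product uses; the column names and data are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(vx * vy)


def rank_feature_crosses(
    columns: Dict[str, List[float]], target: List[float], top_k: int = 3
) -> List[Tuple[str, float]]:
    """Score each pairwise product feature by |correlation| with the target."""
    scored = []
    for a, b in combinations(sorted(columns), 2):
        crossed = [x * y for x, y in zip(columns[a], columns[b])]
        scored.append((f"{a}*{b}", abs(pearson(crossed, target))))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical data where the target depends on the interaction of x1 and x2.
columns = {
    "x1": [1, 2, 3, 4, 5, 6],
    "x2": [2, 1, 4, 3, 6, 5],
    "x3": [5, 3, 1, 6, 2, 4],
}
target = [a * b for a, b in zip(columns["x1"], columns["x2"])]

if __name__ == "__main__":
    print(rank_feature_crosses(columns, target))
```

Correlation only captures linear relationships, so a real system would likely combine several scoring methods; the point here is just that crosses are candidate features generated and then screened automatically.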

Finally, these cloud platforms also offer use-case-specific AI and deep learning products built on AutoML for computer vision (e.g., object detection), natural language processing (e.g., optical character recognition), and time series (e.g., forecasting).

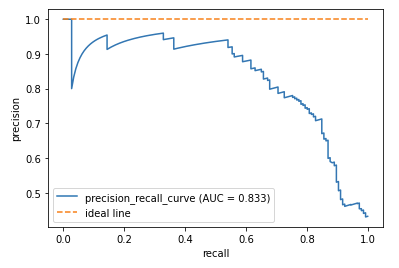

(Translator's note 3) A precision-recall curve, usually called a PR curve after the initials of Precision and Recall, is used to consider both precision and recall, two metrics for evaluating a machine learning model, at the same time. Precision is plotted on the vertical axis and recall on the horizontal axis; the larger the area under the curve, the better the predictions (see image). PR curves are used to evaluate predictions on highly imbalanced data, such as fault detection.

Image Source: codeexa, "Machine Learning Evaluation Metrics for Classification: Precision, Recall, and AUC (ROC Curve, PR Curve) Explained"
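The translator's note describes the PR curve in words. As a from-scratch sketch (standard library only), the code below sweeps a decision threshold over model scores, records a (recall, precision) point at each distinct score, and sums precision times each increase in recall to get average precision, a common step-wise estimate of the area under the PR curve. It assumes binary labels (1 = positive) with at least one positive; the labels and scores are made up. For real work, scikit-learn's `precision_recall_curve` and `average_precision_score` are tested implementations.

```python
from typing import List, Sequence, Tuple


def pr_curve(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (recall, precision) points, one per distinct score threshold,
    sweeping the threshold from the highest score down to the lowest.
    Assumes at least one positive label."""
    total_pos = sum(y_true)
    pairs = sorted(zip(scores, y_true), key=lambda p: p[0], reverse=True)
    points = []
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Count every example tied at this threshold before emitting a point.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((tp / total_pos, tp / (tp + fp)))
    return points


def average_precision(points: List[Tuple[float, float]]) -> float:
    """Step-wise area under the PR curve: sum of precision * increase in recall."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in points:
        ap += precision * (recall - prev_recall)
        prev_recall = recall
    return ap


# Made-up labels and model scores for illustration.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]

if __name__ == "__main__":
    points = pr_curve(labels, scores)
    print(points)
    print(average_precision(points))
```

For this example the average precision is 29/36 (about 0.81); a model that ranks every positive above every negative would score 1.0.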

Who Is AutoML Really Good For?

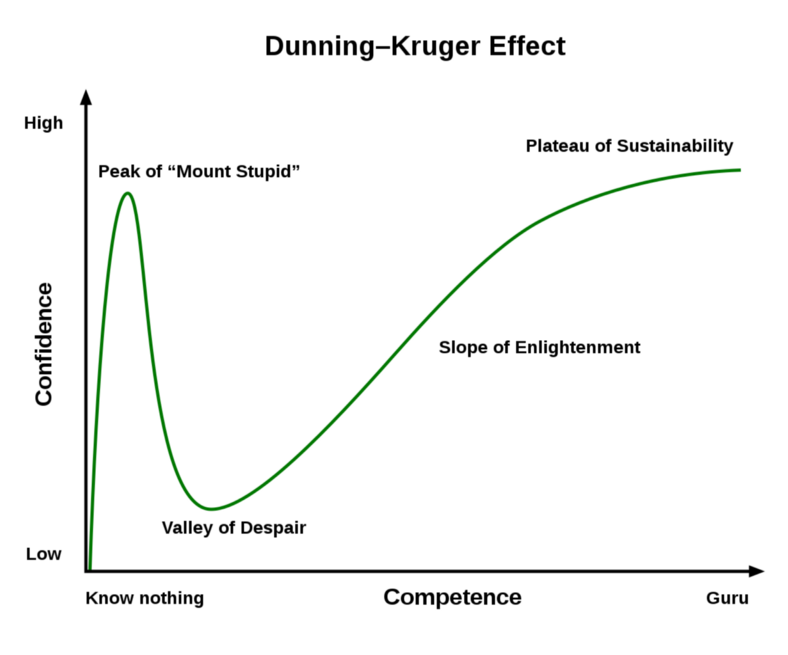

It's really easy to read the above and conclude, "Well, data scientists are clearly useless." I argue the exact opposite. The low-code/no-code movement is genuinely exciting, especially with the widespread adoption of cloud platforms. Highly technical tasks have become drag-and-drop, lowering the barrier to entry for beginners. In fact, it has become so easy that people assume anyone can do data science work, and there is little discussion of the quality of that work or of whether the people doing it understand it. The downside of low-code/no-code tools is that their users tend to get stuck in the "overconfidence, undercompetence" stage of the Dunning-Kruger effect.

Image Source: Public Domain

Abstracting away highly technical and complicated work lowers the barrier to entry, but it also makes it easy for beginners to get stuck. When AutoML can run on virtually unlimited cloud compute, the important questions become which model is best for the task at hand, why certain models fail for our business use case, and why certain metrics should be prioritized for a given problem. The answers to these questions begin to reveal who AutoML's true winners are, but first let's draw a comparison.

Tableau is one of the most popular data analytics products today. I started my career with it, earned its certifications, and am a big fan of the tool. It's a great product because it turns what used to be very technical, time-consuming work into drag and drop: you can easily create bar charts, pie charts, complex dashboards, simulated web pages, and more. It gave me incredible power as a beginner. But let's be honest: a lot of my first dashboards were really bad. They did not prompt insight or action; they were more "fun to look at" than a story that drove action. Even so, the tool's ease of use made it easy to claim expertise. As my career progressed, a master's program deepened my understanding of color theory, the limits of visual perception, designing for purpose, and building data visualizations with code. That understanding helped me climb out of the novice trough, although it would have been much easier to keep believing I was already an expert. Even now, I see plenty of Tableau dashboards that their creators consider effective "because I love the design" when they are not effective at all.

Tableau made it easy for anyone to become a data analyst, but it also showed that most people can't do it well. This is not meant to be offensive; it simply recognizes that good data analysis cannot be reduced to a drag-and-drop tool. Deep expertise comes from investing the time and effort to learn the craft properly, not from being able to create a bar chart in one click.

AutoML helps data scientists more than anyone else. Whenever I hear that AutoML lets someone with no ML experience, such as an MBA, easily do data science work, it strikes me as ridiculous. Although abstracting away the technical complexity makes AutoML approachable for beginners, its primary users are usually experts. AutoML automates the most tedious manual tasks, but the job is much broader and deeper than optimizing the accuracy of ensemble models.
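One reason the job goes beyond optimizing accuracy is that, on imbalanced problems, accuracy can make a useless model look excellent. The toy sketch below uses hypothetical fault-detection data (10 faults among 1,000 examples) to compare a model that never flags a fault with one that flags 20 examples and catches 8 of the 10 faults. Both "models" are just hard-coded prediction lists for illustration.

```python
from typing import Dict, Sequence


def classification_summary(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Convention used here: report 0.0 when the denominator is zero.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical data: the first 10 examples are faults, the other 990 are normal.
y_true = [1] * 10 + [0] * 990

always_ok = [0] * 1000  # never flags a fault
flagger = [1] * 8 + [0] * 2 + [1] * 12 + [0] * 978  # flags 20 rows, catches 8 faults

if __name__ == "__main__":
    print(classification_summary(y_true, always_ok))
    print(classification_summary(y_true, flagger))
```

The model that never flags anything scores 0.99 accuracy and 0.0 recall; the second model has lower accuracy (0.986) but catches 8 of the 10 faults. Which metric to prioritize depends on the business cost of a missed fault versus a false alarm, a judgment the user has to supply when choosing the metric AutoML optimizes.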

The Dunning-Kruger effect is a hypothesized cognitive bias in which people with low ability overestimate their abilities. The graph commonly used to explain it plots confidence on the vertical axis against knowledge on the horizontal axis (see graph below).

The four stages of the relationship between knowledge and confidence in the Dunning-Kruger effect

Image Source: HR BLOG, "What Is the Dunning-Kruger Effect? Characteristics of Vulnerable People and How to Deal with Them"

According to the Dunning-Kruger effect, acquiring knowledge in any field involves going through four stages.


For the future of collaboration

Every seasoned data scientist I know is not at all worried about AutoML; if anything, they are excited about it. They can't wait for the tedious, repetitive parts of PoC work to be automated, because there is no real value in doing that work by hand. They also know what AutoML cannot do: it cannot turn the model's output into recommendations for business leaders about what actions to take (printing metrics is not interpretation), it cannot judge whether useful new features that are not in the dataset could be obtained, and it does not understand how to integrate machine learning models into larger systems, such as the models and software built for the entire enterprise.

There are examples of people without ML training using AutoML, putting models into production, and adding millions of dollars of value to their businesses. That success does not come from AutoML, but from the company's data culture and infrastructure. I've read claims that Google is "killing data scientists" with AutoML, but few people mention that Google's own data scientists use AutoML. In most companies, joining the "citizen data scientist" bandwagon requires a central authority that oversees which models are being built, why, and how they are performing.

That said, AutoML will eliminate certain kinds of jobs, namely that of the "pseudo data scientist." Fake data scientists and machine learning engineers who are stuck in the "overconfidence, undercompetence" stage, refusing to learn, grow, and move beyond it, will be significantly devalued by AutoML and relegated to analyst-type functions. Citizen data scientists vary widely, but as long as expectations are managed, that is fine. Just as you wouldn't expect high-quality results from citizen doctors or lawyers, you shouldn't expect much from citizen data scientists either.

Parts of this article may have sounded a little sensational, but I do think AutoML in particular will redefine roles and responsibilities. I am not arguing that the roles of data scientists and machine learning engineers will remain completely unchanged with AutoML. But their expertise will be much more needed, and much more appreciated, than it is today. The prevalence of tools like AutoML signals a widespread need for these skills, and those who can wield such tools at a high level as part of their skill set will be the winners of applied machine learning over the next 10 years.