Table of contents

- An overview of common design patterns in building successful machine learning solutions

- Introduction

- MLOps design pattern

- Workflow pipeline

- Feature store

- Transform

- Multimodal input

- Cascade

- Conclusion

An overview of common design patterns in building successful machine learning solutions

Introduction

A design pattern is a set of MLOps and reusable solutions to common problems. Data science, software development, architecture and other engineering disciplines consist of many recurring problems. So categorizing the most common problems, making them easily recognizable, and providing different forms of blueprints for solving them would be of great broad benefit to the community.

The idea of using design patterns in software development came from “Design Patterns: Elements of Reusable Object-Oriented Software” by Erich Gamma et al . “Learning Design Patterns” [2] applied to machine learning processes.

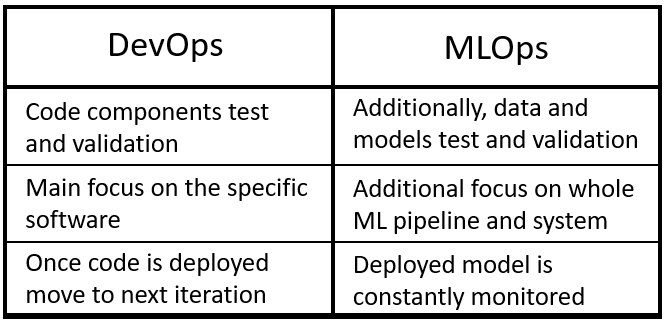

This article introduces various design patterns that make up MLOps . MLOps (Machine Learning -> Operations) are a set of processes designed to transform experimental machine learning models into productized services with the purpose of making real-world decisions. MLOps is based on the same principles as DevOps, but with a greater focus on data validation and ongoing training and evaluation (Figure 1).

Exhibit 1: DevOps and MLOps (by author)

(*Translation Note 3) Below is the Japanese translation of Chart 1.

| DevOps | MLOps |

| Code component testing and verification | In addition to the left, model testing and validation |

| Focus primarily on software in specific areas | In addition to the left, focus on ML pipelines and systems in general |

| Once the code is implemented, move on to the next iteration | Implemented models are continuously monitored |

Key benefits of leveraging MLOps include:

- Faster time to market (faster implementation).

- Improved model robustness (e.g. easier identification of data drift, allowing model retraining).

- More flexibility in training/comparing different ML models.

DevOps , on the other hand , emphasizes two key concepts for software development. The two are continuous integration (CI) and continuous delivery (CD). Continuous integration is the use of a central repository as a means for teams to work together on projects, keeping the process of adding, testing, and validating new code as close as possible as new code is added by different team members. It is to automate. In this way, you can test at any time whether the various parts of your application are able to communicate correctly with each other, and any form of error can be identified as early as possible. Continuous delivery focuses on smoothly updating software implementations and tries to avoid downtime as much as possible.

MLOps design pattern

workflow pipeline

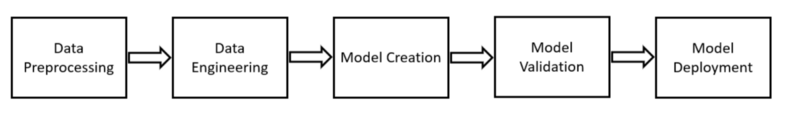

A machine learning (ML) project goes through various steps (Figure 2).

(*Translation Note 5) Below is the Japanese version of Chart 2.

When prototyping a new model, it is common to code the entire process in one ( monolithic ) script at first, but as the project becomes more complex and many team members are involved, , comes the need to split each step of the project into separate scripts ( microservices ). Taking such an approach has the following advantages:

- It makes it easier to change and experiment with different step orchestrations.

- You can make the project scalable by changing the definition (new steps can be added/removed easily).

- Each team member can focus on different steps in the flow.

- You can create separate artifacts for different steps.

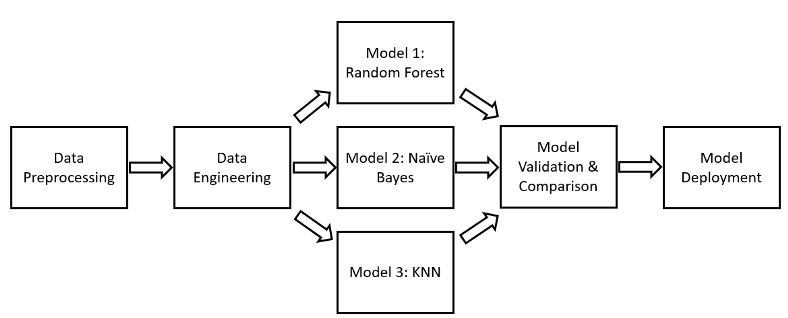

The Workflow Pipeline design pattern aims to define a blueprint for creating ML pipelines. An ML pipeline can be represented as a Directed Acyclic Graph ( DAG ) where each step is characterized by a container (Figure 3).

(*Translation Note 6) The Japanese version of Figure 3 is below.

By following this structure, you can build reproducible and manageable ML processes. By using the workflow pipeline, the following benefits can be obtained.

- By adding or removing steps from the flow, you can create complex experiments to test different preprocessing techniques, machine learning models, and hyperparameters.

- By storing the output of each step separately, we can avoid rerunning the first step of the pipeline if changes are made in the last step (thus saving time and computing power).

- If an error occurs, you can easily see which steps need to be updated.

- Pipelines implemented in production using CI/CD can be scheduled to rerun based on a variety of factors, including time intervals, external triggers, and changes in ML metrics.

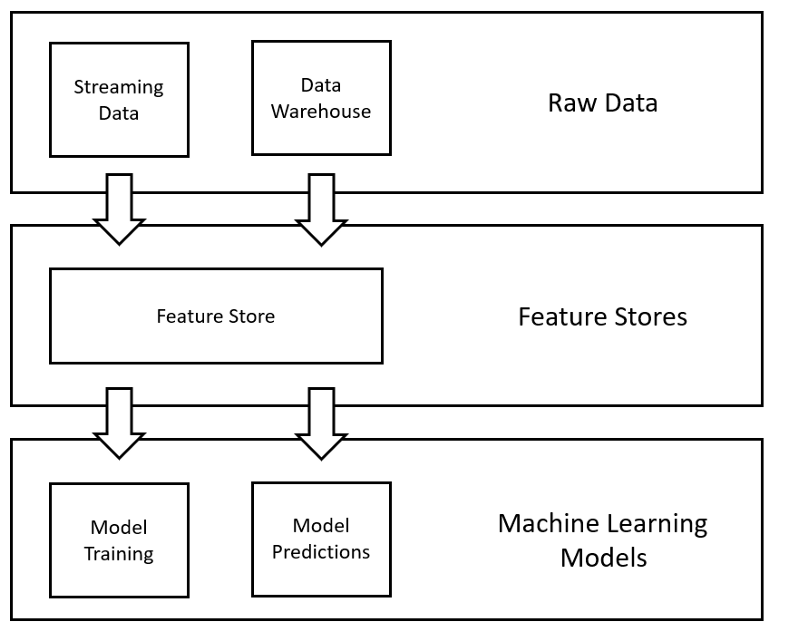

Feature store

Feature stores are data management layers designed for machine learning processes (Figure 4). The primary use of this design pattern is to simplify how organizations manage and utilize machine learning features. This is achieved by creating some kind of central repository that the company uses to store all the features created in the ML process. This way, data scientists needing the same subset of features in different ML projects don’t have to go through the (time-consuming) process of converting raw data to processed features multiple times. Two popular open source feature store solutions are Feast and Hopsworks .

(*Translation Note 7) Below is the Japanese translation of Chart 4.

For more information on the Feature Store, please refer to our previous article .

(*Translation Note 8) The author of this article, Ippolito, posted on Medium, ” Starting a Feature Store, ” lists the following seven benefits of using this design pattern:

7 Benefits of Using Feature Store

|

Transform

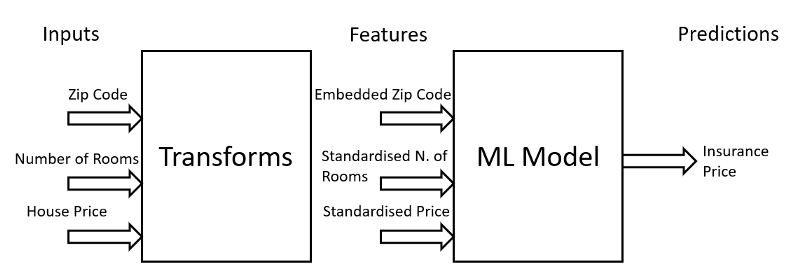

The transform design pattern aims to facilitate the implementation and maintenance of production machine learning models by keeping inputs, features, and transformations as separate entities (Figure 5). In fact, raw data usually need to be subjected to various preprocessing in order to be used as input for machine learning models, and in preprocessing these data before inference, in order to reuse a series of transform operations must be saved.

(*Translation Note 9) Below is the Japanese version of Chart 5.

For example, it is common to apply normalization/standardization techniques to numerical data to handle outliers or make the data more Gaussian before training the ML model. These transformations can be saved and reused in the future when new data becomes available for inference. If these transformations are not preserved, the input data used to train the ML model and the input data used for inference will have different distributions, resulting in data skew between training and serving. will occur.

Another option is to use the feature store design pattern to avoid bias between training and serving time.

Multimodal input

ML models can be trained on different types of data, such as images, text, and numbers, but some models only accept certain types of input data. For example, Resnet-50 can only take images as input data, while other ML models like KNN (K-Nearest Neighbors) can only take numbers as input data.

Solving ML problems may require the use of different forms of input data. In such cases, some transformation needs to be done to bring different types of input data into a common representation (multimodal input design pattern). For example, suppose you enter a combination of text, numeric, and categorical data. To enable ML models to learn, techniques such as sentiment analysis, bag of words, and word embeddings are used to convert text data into numerical format, and categorical data is similarly encoded in one-shot encoding. Convert. This way, all the data is in the same format (numerical) so that it can be used to train the model.

Cascade

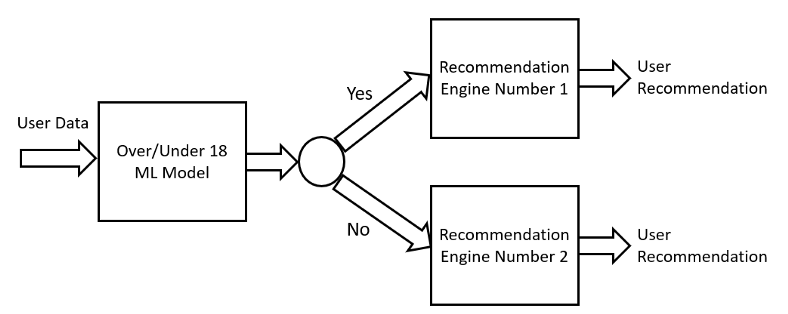

Sometimes an ML problem cannot be solved with just one ML model. In such cases, it is necessary to create a series of dependent ML models to achieve the ultimate goal. For example, let’s think about predicting what products to recommend to a certain user (Figure 6). To solve this problem, we first create a model that predicts whether a user is over or under 18, and depending on the response from this model, we run two different ML recommendation engines (e.g. designed to endorse products, or designed to endorse products to people under the age of 18).

(*Translation Note 10) Below is the Japanese translation of Chart 6.

To build such a cascade of ML models, we need to train them together. In fact, these models are dependent on each other, so if the first model changes (while the others are not updated), the subsequent models can become unstable. Such an update process can be automated using the workflow pipeline design pattern.

Conclusion

In this article, we’ve explored some of the most common design patterns that underpin MLOps. Readers interested in learning more about machine learning design patterns can find additional information in this talk by Valliappa Lakshmanan at AIDevFest20, as well as the GitHub repository published by the aforementioned book Design Patterns in Machine Learning .