Contents

- Introduction

- Vision Transformer

- Scale-up

- High-performance large-scale image recognition

- Visualization

- Summary

- Gratitude

Introduction

Thursday, December 3, 2020

Posted by Neil Houlsby and Dirk Weissenborn, Researchers, Google Research

Convolutional neural networks (CNNs) have been used in computer vision since the 1980s, but they did not come to the forefront until 2012, when AlexNet outperformed the then state-of-the-art image recognition methods by a wide margin. Two factors made this breakthrough possible: (i) the availability of large training sets such as ImageNet, and (ii) the use of commoditized GPU hardware, which provided significantly more compute for training. Since 2012, CNNs have been the model of choice for vision tasks.

The advantage of CNNs is that they avoid the need for hand-designed visual features and instead learn to perform tasks directly from data, “end to end”. However, although CNNs avoid manual feature extraction, the architecture itself is designed specifically for images and can be computationally expensive. Looking ahead to the next generation of scalable vision models, one may ask whether such domain-specific design is necessary, or whether more domain-agnostic and computationally efficient architectures can be leveraged to achieve state-of-the-art results.

As a first step in this direction, we present the Vision Transformer (ViT), a vision model based as closely as possible on the Transformer architecture originally designed for text-based tasks. ViT represents an input image as a sequence of image patches, similar to the sequence of word embeddings used when applying Transformers to text, and directly predicts class labels for the image. ViT performs well when trained on sufficient data, outperforming a comparable state-of-the-art CNN with a quarter of the computational resources. To facilitate further research in this area, we have open-sourced both the code and the models.

Vision Transformer

The original text Transformer takes a sequence of words as input, which it then uses for classification, translation, or other natural language processing tasks. For ViT, we made as few changes as possible to the Transformer design so that it operates directly on images instead of words, and observed how much about image structure the model can learn on its own.

ViT divides an image into a grid of square patches. Each patch is flattened into a single vector by concatenating the channels of all pixels in the patch, and the vector is then linearly projected to the desired input dimension. Because the Transformer is agnostic to the structure of its input elements, we add a learnable position embedding to each patch, which allows the model to learn about the structure of the image. A priori, ViT does not know the relative positions of patches in the image, or even that the image has a 2D structure; it must learn this information from the training data and encode it in the position embeddings.
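The patch-embedding step described above can be sketched in a few lines. This is a minimal illustration in dependency-free Python, not the released ViT code: the function names `patchify` and `embed_patches`, the toy 4x4 image, the patch size of 2, the token dimension of 8, and the randomly initialized weights are all assumptions made for the example.

```python
import random


def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened patch vectors.

    Patches are returned in row-major grid order; each vector concatenates
    the channels of every pixel in the patch.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([
                value
                for row in image[top:top + patch_size]
                for pixel in row[left:left + patch_size]
                for value in pixel
            ])
    return patches


def embed_patches(patches, projection, position_embeddings):
    """Linearly project each patch, then add that patch's position embedding."""
    tokens = []
    for patch, position in zip(patches, position_embeddings):
        # projection holds one weight vector per output dimension.
        projected = [sum(x * w for x, w in zip(patch, weights))
                     for weights in projection]
        tokens.append([p + e for p, e in zip(projected, position)])
    return tokens


# Hypothetical toy sizes: a 4x4 RGB image, 2x2 patches, 8-dimensional tokens.
rng = random.Random(0)
image = [[[rng.random() for _ in range(3)] for _ in range(4)] for _ in range(4)]
patches = patchify(image, patch_size=2)

patch_dim, model_dim = len(patches[0]), 8
# In a trained model both of these are learned; here they are random stand-ins.
projection = [[rng.gauss(0, 0.02) for _ in range(patch_dim)]
              for _ in range(model_dim)]
position_embeddings = [[rng.gauss(0, 0.02) for _ in range(model_dim)]
                       for _ in patches]

tokens = embed_patches(patches, projection, position_embeddings)
print(len(tokens), len(tokens[0]))  # number of tokens, token dimension
```

Plain lists keep the sketch self-contained; a practical implementation would use an array library and batch the projection as a single matrix multiplication. Note that nothing in `embed_patches` tells the model where a patch sits in the grid: that information can only enter through the position embeddings, which is why they have to be learned.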

Scale-up

We first trained ViT on ImageNet, where it achieved a best score of 77.9% top-1 accuracy. This is decent for a first attempt, but it falls well short of the state of the art: the best CNN trained on ImageNet without extra data reaches 85.8%. Despite mitigation strategies such as regularization, ViT overfits the ImageNet task because it lacks built-in prior knowledge about images.

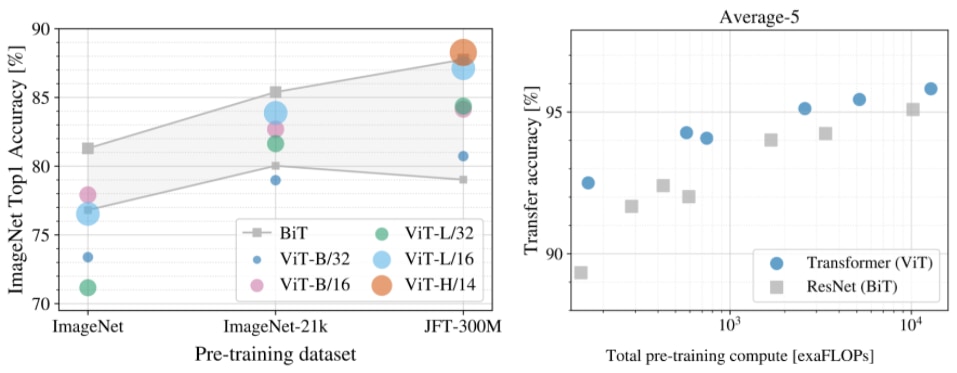

To investigate the effect of dataset size on model performance, we trained ViT on ImageNet-21k (14M images, 21k classes) and JFT (300M images, 18k classes), and compared the results with a state-of-the-art CNN, Big Transfer (BiT). As previously observed, ViT performs significantly worse than the CNN equivalent (BiT) when trained on ImageNet (1M images). On ImageNet-21k (14M images), however, performance is comparable, and on JFT (300M images) ViT outperforms BiT.

Finally, we investigated the effect of the amount of computation used to train the models. For this, we trained several different ViT models and CNNs on JFT. These models span a range of model sizes and training durations, so the compute required for training varied accordingly. We find that, for a given amount of compute, ViT outperforms the equivalent CNNs.

High-performance large-scale image recognition

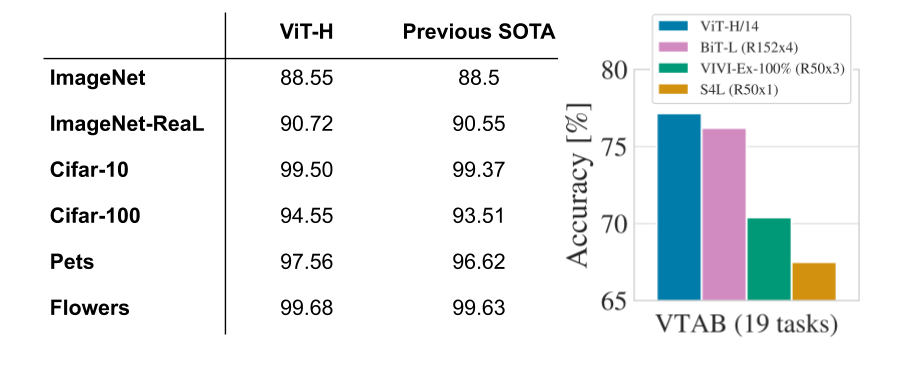

Our data suggest that (1) with sufficient training, ViT can perform very well, and (2) ViT offers an excellent trade-off between performance and compute at both small and large compute scales. Therefore, to see whether the performance improvements carry over to even larger scales, we trained a 600M-parameter ViT model.

This large ViT model attains state-of-the-art performance on multiple popular benchmarks, including 88.55% top-1 accuracy on ImageNet and 99.50% on CIFAR-10. ViT also performs well on the cleaned-up version of the ImageNet evaluation set, “ImageNet-Real”, attaining 90.72% top-1 accuracy. Finally, ViT works well on diverse tasks even with few training data points. For example, on the VTAB-1k suite (19 tasks with 1,000 data points each), ViT attains 77.63%, significantly ahead of the single-model state of the art (76.3%) and even matching the state of the art attained by an ensemble of multiple models (77.6%). Most importantly, these results are obtained with fewer compute resources than previous state-of-the-art CNNs: for example, about a quarter of the compute used by the pre-trained BiT models.

Visualization

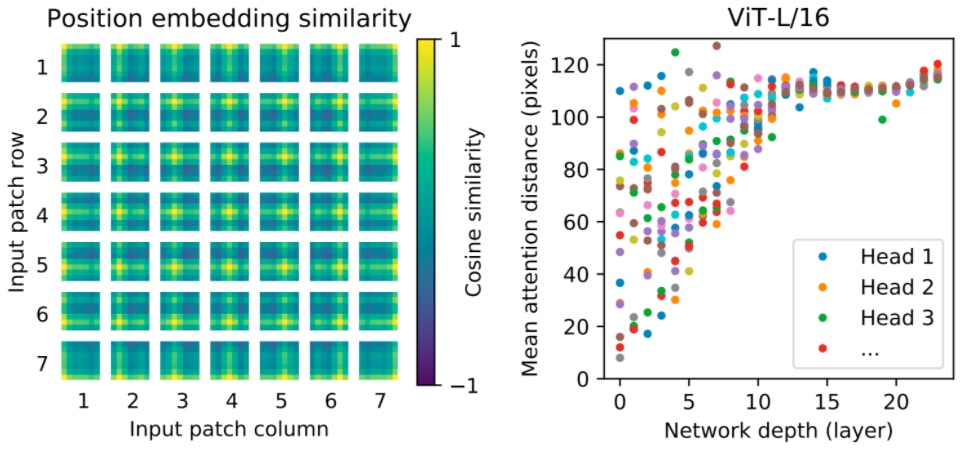

To gain some intuition into what the model learns, we visualized some of its internal workings. First, we looked at the position embeddings, the parameters that the model learns in order to encode the relative positions of patches, and found that ViT is able to reproduce an intuitive image structure. Each position embedding is most similar to the others in the same row and column, indicating that the model has recovered the grid structure of the original images. Second, for each Transformer block, we examined the average spatial distance between an element and the elements it attends to. In the higher layers (depths 10-20), only global features are used (i.e., large attention distances), whereas the lower layers (depths 0-5) capture both global and local features, as indicated by the large range of the mean attention distance. By contrast, only local features are present in the lower layers of a CNN. These experiments indicate that ViT can learn features that are hard-coded into CNNs (such as awareness of grid structure), but is also free to learn more generic patterns, such as a mix of local and global features in the lower layers, that can aid generalization.

Left: ViT learns the grid-like structure of the image patches via its position embeddings. Right: The lower layers of ViT contain both global and local features, while the higher layers contain only global features.
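The mean attention distance discussed above can be made concrete with a short sketch. It assumes a single attention head whose weights cover only the image patches, indexed row-major on a square grid; the function name `mean_attention_distance` and the two toy attention matrices are hypothetical, and the exact averaging used for the published figure may differ.

```python
import math


def mean_attention_distance(attention, grid_size, patch_size=1):
    """Attention-weighted average distance between query and key patches.

    attention[q][k] is the weight that query patch q places on key patch k;
    each row is assumed to sum to 1. Distances are measured in patches, or
    in pixels if patch_size is the patch side length in pixels.
    """
    num_patches = grid_size * grid_size
    if len(attention) != num_patches or any(
            len(row) != num_patches for row in attention):
        raise ValueError("attention must be num_patches x num_patches")
    # (row, column) grid coordinates of every patch.
    coords = [divmod(index, grid_size) for index in range(num_patches)]
    total = 0.0
    for (q_row, q_col), weights in zip(coords, attention):
        for (k_row, k_col), weight in zip(coords, weights):
            total += weight * patch_size * math.hypot(q_row - k_row,
                                                      q_col - k_col)
    return total / num_patches  # average over query patches


grid_size = 2
n = grid_size * grid_size
# A purely local head: every patch attends only to itself.
local_attention = [[1.0 if q == k else 0.0 for k in range(n)]
                   for q in range(n)]
# A purely global head: every patch attends equally to all patches.
global_attention = [[1.0 / n] * n for _ in range(n)]

print(mean_attention_distance(local_attention, grid_size))   # 0.0
print(mean_attention_distance(global_attention, grid_size))  # about 0.85
```

Under this definition, a layer whose heads all report small values is behaving like a stack of local filters, while a wide spread of values across heads corresponds to the mix of local and global features described for the lower ViT layers.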

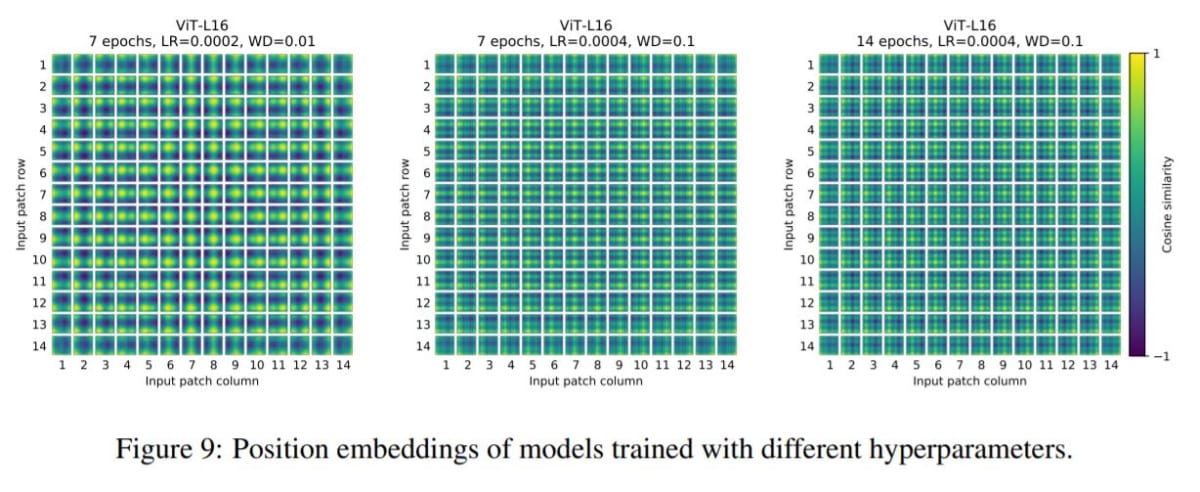

The appendix of the ViT preprint, “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale,” visualizes position embeddings trained with different hyperparameters. The three images quoted from the appendix show the cosine similarity between the position embeddings of patches when ViT-L/16 is trained under the following conditions. The relative positions of the patches can be read off visually from the pattern of cosine similarities.

- Left: epoch 7, learning rate 0.0002, Weight Decay 0.01

- Center: epoch 7, learning rate 0.0004, Weight Decay 0.1

- Right: epoch 14, learning rate 0.0004, Weight Decay 0.1

As the images show, the pattern of cosine similarities changes when the training hyperparameters are changed.
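The kind of cosine-similarity map shown in these images can be computed as in the sketch below. The helper names `cosine_similarity` and `similarity_map` are hypothetical, and the embeddings are hand-built stand-ins (a one-hot row code concatenated with a one-hot column code) rather than learned parameters, chosen only so that the expected grid pattern is easy to verify.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity_map(position_embeddings, index, grid_size):
    """Similarity of one patch's embedding to every patch, laid out as a grid."""
    reference = position_embeddings[index]
    similarities = [cosine_similarity(reference, embedding)
                    for embedding in position_embeddings]
    return [similarities[row * grid_size:(row + 1) * grid_size]
            for row in range(grid_size)]


def one_hot(i, size):
    return [1.0 if j == i else 0.0 for j in range(size)]


# Toy stand-in for learned embeddings on a 3x3 grid of patches:
# each embedding is [one-hot row code] + [one-hot column code].
grid_size = 3
toy_embeddings = [one_hot(row, grid_size) + one_hot(col, grid_size)
                  for row in range(grid_size) for col in range(grid_size)]

# Similarity map for the center patch (row 1, column 1, row-major index 4).
center_map = similarity_map(toy_embeddings, index=4, grid_size=grid_size)
for row in center_map:
    print([round(value, 2) for value in row])
```

For these toy embeddings the map is a cross: similarity 1.0 at the center patch, 0.5 along its row and column, and 0.0 elsewhere. Learned embeddings give a softer version of the same picture, and computing one such map per patch yields the tiled grid of small heat maps seen in the figure.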

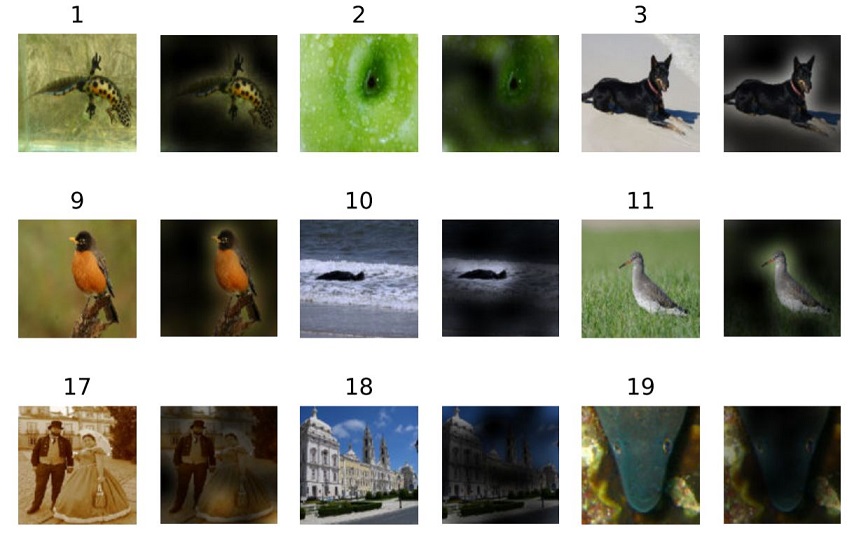

The appendix also includes images that highlight the regions ViT attends to, showing which parts of an image the model focused on to perform the classification. Some of those images are quoted here.

Summary

Although CNNs have revolutionized computer vision, our results indicate that models tailor-made for imaging tasks may be unnecessary, or even sub-optimal. As dataset sizes keep growing and unsupervised and semi-supervised methods continue to develop, it becomes increasingly important to develop new vision architectures that train more efficiently on these datasets. We believe ViT is a first step toward generic, scalable architectures that can solve many vision tasks, or even tasks from many domains, and we look forward to future developments.

A preprint of our work, as well as the code and models, is publicly available.

Gratitude

We would like to thank our co-authors in Berlin, Zurich, and Amsterdam: Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, and Jakob Uszkoreit. We would also like to thank Andreas Steiner for his help with infrastructure and open-sourcing, Joan Puigcerver and Maxim Neumann for their work on the large-scale training infrastructure, and Dmitry Lepikhin, Aravindh Mahendran, Daniel Keysers, Mario Lučić, Noam Shazeer, and Colin Raffel for useful discussions. Finally, we thank Tom Small for creating the Visual Transformer animation in this post.